2017 'Attention Is All You Need' Paper Revolutionized AI, Powering Modern Models

The fundamental architecture underpinning nearly all modern artificial intelligence models, including leading platforms like ChatGPT, Claude, Gemini, and Grok, is the Transformer. This groundbreaking neural network design, introduced in a pivotal 2017 paper, marked a significant turning point in AI's ability to understand and generate language. Y Combinator's Ankit Gupta recently highlighted this evolution, tracing AI's journey from earlier sequential models to the current era.

Before the Transformer, sequence models such as Recurrent Neural Networks (RNNs) and their Long Short-Term Memory (LSTM) variants struggled to process sequential data efficiently. These older architectures processed information one element at a time, with each step depending on the result of the previous one, which prevented parallel computation across a sequence and made it hard to retain context over long inputs. Attention mechanisms, first added on top of recurrent models, provided an initial improvement by allowing a model to weigh the importance of different parts of its input.
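To make the sequential bottleneck concrete, here is a minimal sketch of a toy recurrent network with a single scalar hidden state. The function name `rnn_forward` and the weights `w_x` and `w_h` are illustrative assumptions, not taken from any particular model; the point is only that each step needs the hidden state from the step before it.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, h0=0.0):
    """Toy scalar recurrence: h_t = tanh(w_x * x_t + w_h * h_{t-1}).

    The weights are arbitrary illustrative values, not trained parameters.
    """
    h = h0
    states = []
    for x in inputs:
        # Step t cannot start until step t-1 has produced h,
        # so the loop cannot be parallelized across positions.
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states

# A signal at the first position, followed by zeros.
states = rnn_forward([1.0, 0.0, 0.0])
print(states)
```

In this toy setup the influence of the first input shrinks at every later step, which loosely mirrors the difficulty recurrent models have in carrying context across long sequences.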

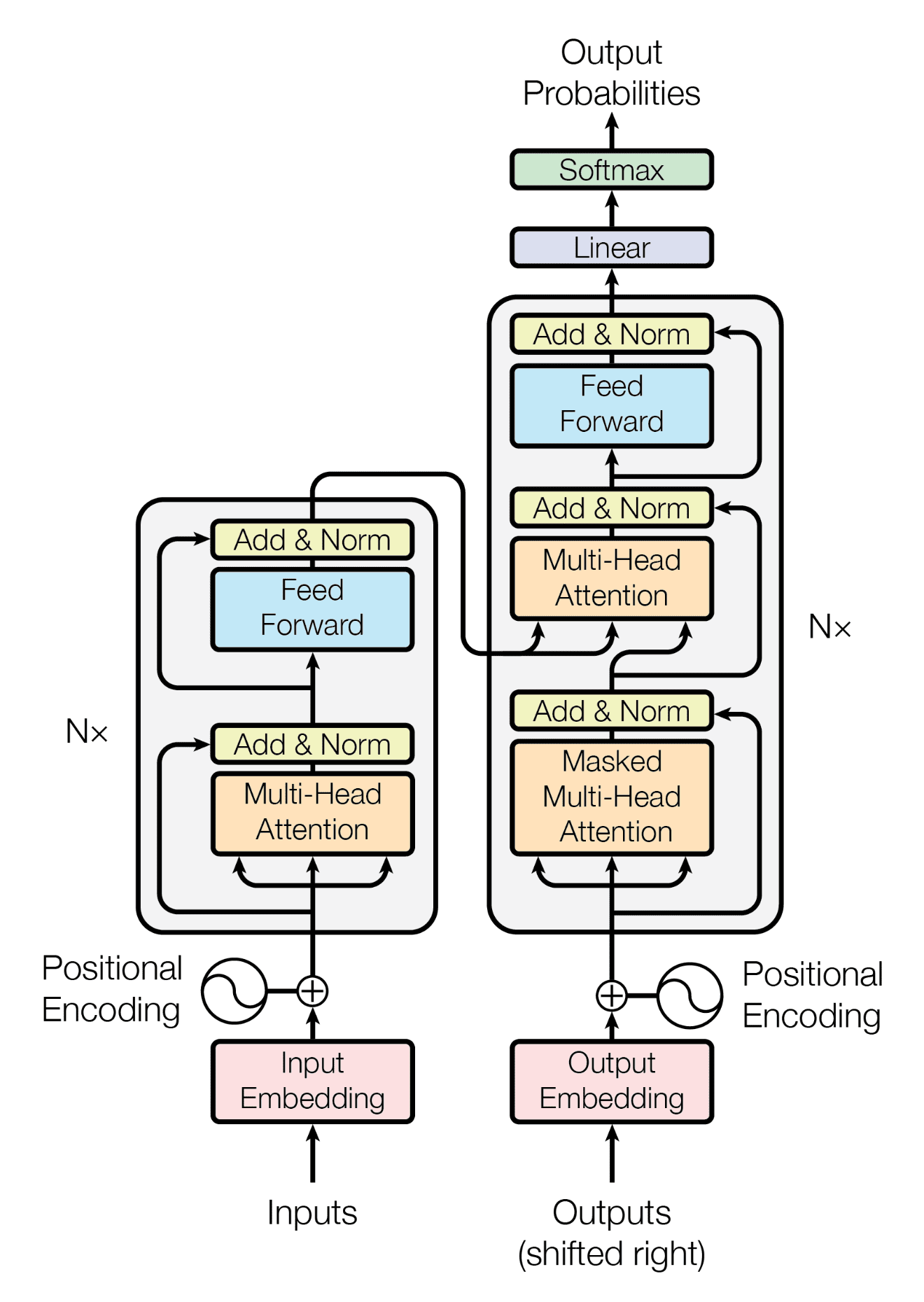

The true breakthrough arrived with the 2017 paper, "Attention Is All You Need," authored primarily by researchers at Google. This seminal work proposed an architecture that dispensed with recurrence and convolutions entirely, relying instead on self-attention to relate every position in a sequence to every other position. Because no step has to wait for the one before it, entire input sequences can be processed in parallel, which drastically improved training speed and the model's capacity to capture long-range dependencies within data.
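The core operation can be sketched in a few lines. The example below is a simplified, single-head version of scaled dot-product self-attention written in plain Python; the helper names (`softmax`, `matmul`, `self_attention`) and the identity projection matrices are assumptions chosen for illustration, and a real Transformer adds learned projections, multiple heads, positional information, and feed-forward layers.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x is a list of token vectors; w_q, w_k, w_v are projection matrices
    (hypothetical values here, learned in a real model).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of k
    # scores[i][j] = (q_i . k_j) / sqrt(d_k), computed for all pairs at once
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]
    # Each output row is a weighted average of all value vectors.
    return matmul(weights, v), weights

identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
output, weights = self_attention(tokens, identity, identity, identity)
print(weights)
```

Every row of `weights` is computed independently of the others, so nothing forces position 3 to wait for position 2. That independence is what allows the whole sequence to be handled as a batch of matrix operations.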

As Y Combinator's Ankit Gupta detailed, this discovery "unlocked the modern AI era." The Transformer's ability to process data in parallel and understand complex relationships within sequences made it highly scalable and efficient. This efficiency allowed for the development of significantly larger and more capable language models.

The Transformer architecture quickly became the backbone for a new generation of AI, extending its influence far beyond natural language processing. It now powers advancements in computer vision, speech recognition, and various generative AI applications. Its versatility and robust performance have cemented its status as the foundational technology for today's most sophisticated AI systems.