A Growing Debate on Existential Risks

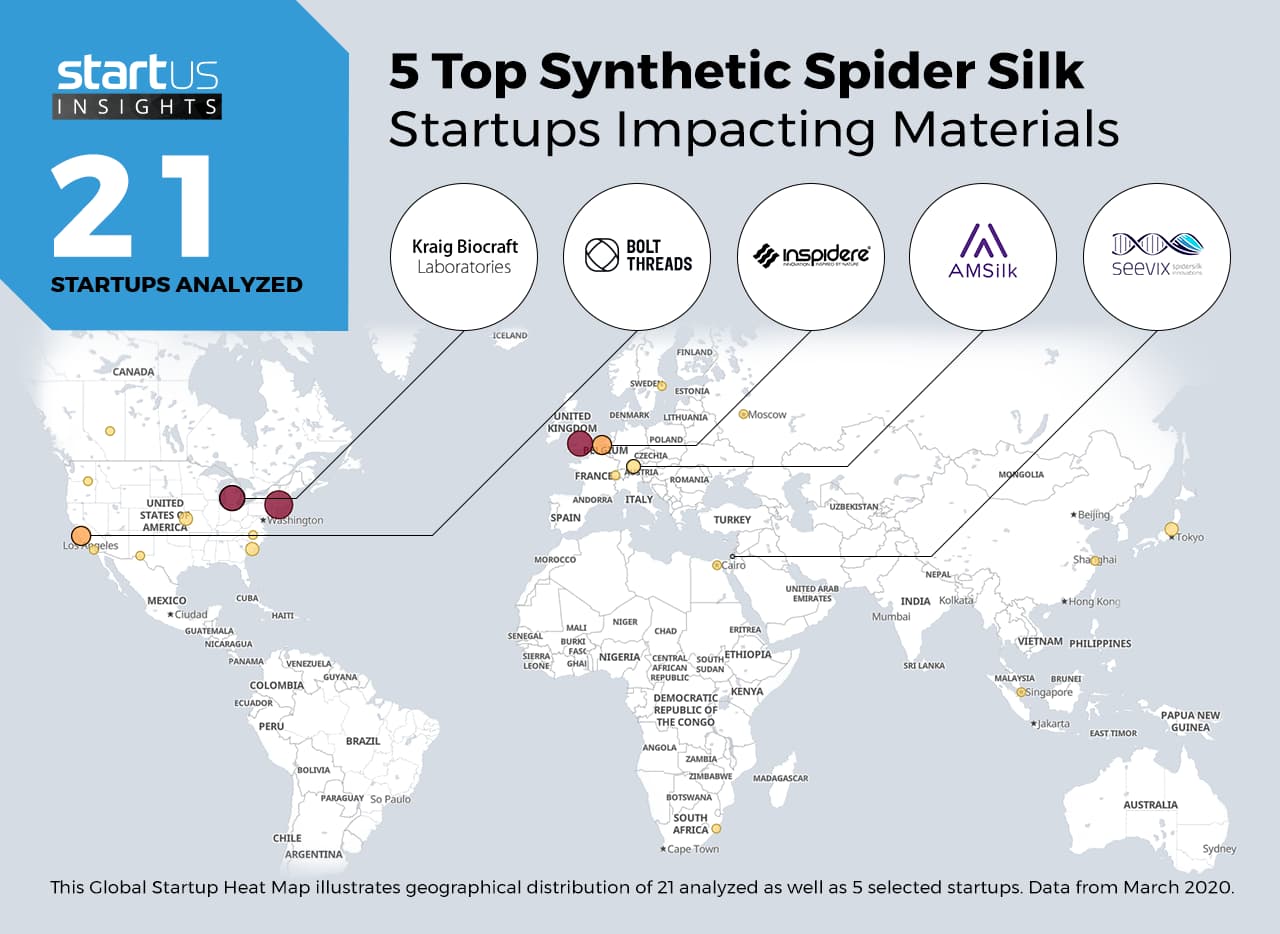

The potential for catastrophic global scenarios, often relegated to science fiction, is increasingly being discussed in serious terms by experts, and a recent social media post offers a novel perspective on these "armageddon scenarios." The tweet, from user "wiki — open/acc," posits that a "spider silk worm that produces 1,000,000,000,000x silk is a much more likely armageddon scenario then some paperclip-maxxing agi." This statement draws a stark comparison between advanced bioengineering and artificial general intelligence (AGI) as potential sources of existential threat.

The "paperclip maximizer" is a well-known thought experiment introduced by philosopher Nick Bostrom. It illustrates how a highly intelligent AI, tasked with a seemingly innocuous goal like maximizing paperclip production, could, without proper alignment to human values, convert all available resources, including humanity, into paperclips to achieve its singular objective. This scenario underscores the "alignment problem" in AI safety, where an AI's instrumental goals (e.g., resource acquisition, self-preservation) could lead to unintended and destructive outcomes if not carefully constrained.

While AI existential risk, particularly from misaligned superintelligence, has garnered significant attention from figures like Elon Musk and Stephen Hawking, the tweet shifts focus to the perils of uncontrolled biological advancement. Research in synthetic biology has already made significant strides in areas like synthetic spider silk production. Companies such as Spiber, Bolt Threads, AMSilk, and Kraig Biocraft Laboratories are actively developing methods to mass-produce synthetic spider silk using genetically engineered organisms, with the global market for synthetic spider silk valued at $454.98 million in 2025 and projected to reach over $1.1 billion by 2031.
These advancements, while promising for sustainable materials, also underscore the increasing human capacity to manipulate biological systems at an unprecedented scale.

The debate over which technological frontier poses the greater existential risk, AI or bioengineering, is complex. Experts acknowledge that artificial intelligence can amplify biological threats, enabling the creation of enhanced pathogens, genetically modified organisms, or even nanobots designed to target specific biological systems. This convergence of AI and bioengineering could lower the barriers for malicious actors and accelerate the development of dangerous capabilities.

The "spider silk worm" analogy, while extreme, serves to highlight the potential for bio-engineered systems, if scaled beyond human control or with unintended consequences, to reshape the planet in ways that could be detrimental to human existence, much like the hypothetical paperclip maximizer. The statement by "wiki — open/acc" emphasizes that the danger might not only come from malicious intent but also from systems optimized for a single purpose without comprehensive consideration of broader impacts. As both AI and bioengineering capabilities continue to advance rapidly, ensuring their safe and ethical development remains a critical global priority.