AI Hallucinations Poised to Redefine Internet Debates by 2025, Experts Caution

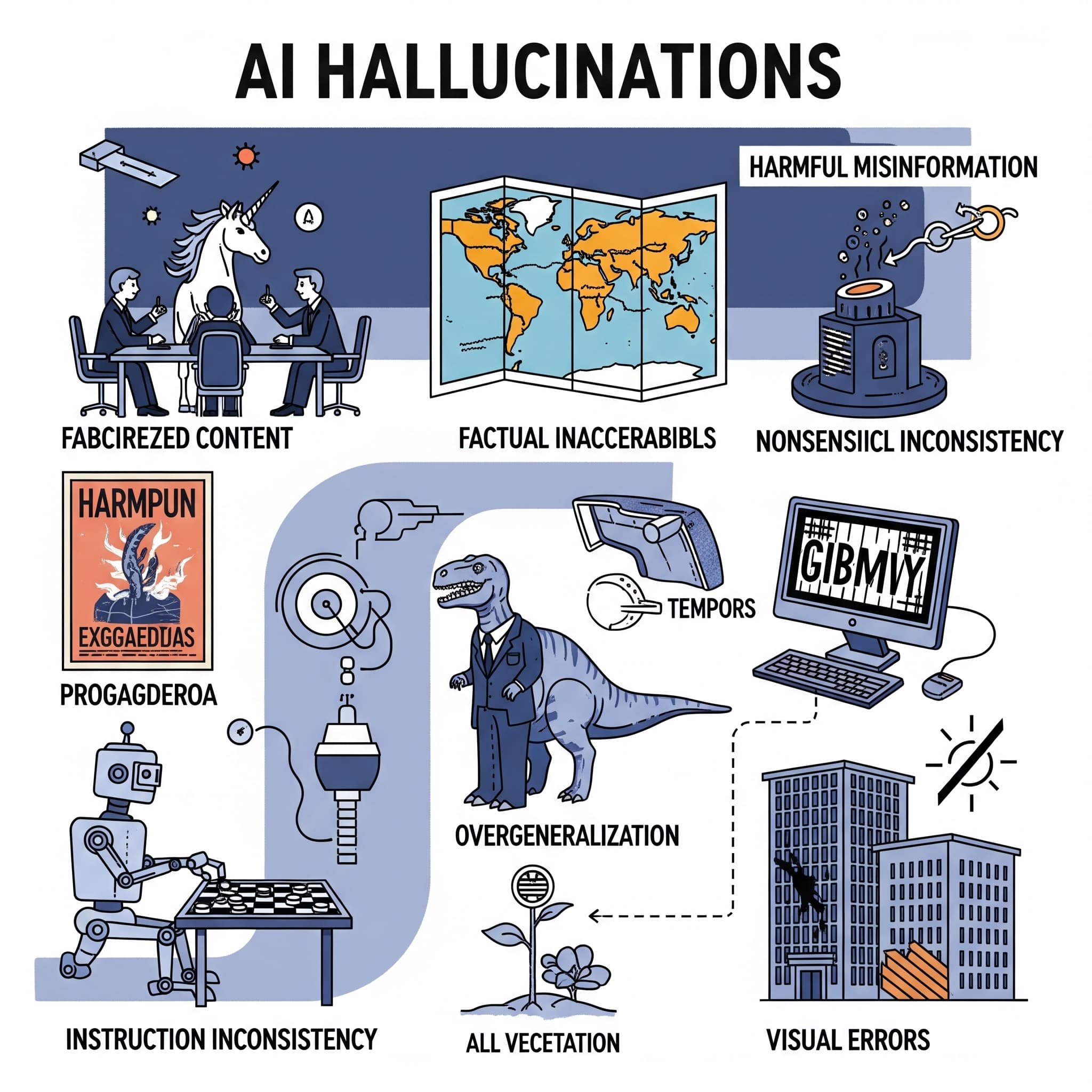

Technology experts and researchers are increasingly concerned that artificial intelligence (AI) hallucinations could significantly alter online discourse by 2025. A hallucination occurs when an AI model generates information that sounds plausible but is factually incorrect or nonsensical, and the phenomenon is increasingly seen as a profound challenge to information integrity and public trust. Digital platforms and society alike worry that such AI-generated falsehoods could permeate, and even dominate, internet discussions.

Social media user 𝖓𝖎𝖓𝖊 🕯 recently captured this speculative scenario in a tweet, predicting a future in which online debates are "primarily composed of exchanging screenshots of AI hallucinations which disagree with one another." The prediction underscores a critical vulnerability in the evolving digital ecosystem: the line between human-verified fact and AI-fabricated content is becoming harder to draw. Experts suggest that the sheer volume and sophistication of AI-generated content could overwhelm traditional fact-checking mechanisms.

AI hallucinations stem from the probabilistic nature of large language models (LLMs). These models generate text by predicting the most likely next word given the words so far, rather than by looking facts up in a verified database. As a result, they can produce confident yet entirely false statements, making it difficult for users to tell truth from fiction. As LLMs are deployed across more applications, concerns are growing that they will propagate misinformation, potentially leading to widespread confusion and distrust across online communities.
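To make the "most likely word, not verified fact" point concrete, here is a deliberately tiny sketch. It assumes a hypothetical lookup table (`TOY_MODEL`) of next-word probabilities whose numbers are invented for illustration, and a hypothetical `greedy_complete` helper that always picks the single most probable next word (greedy decoding, one simple strategy; real LLMs compute these probabilities with a neural network over subword tokens and often sample rather than pick the maximum). Because the loop never consults a source of facts, a fluent but wrong answer wins whenever it happens to carry the highest probability.

```python
from math import prod
from typing import Dict, List, Tuple

END = "<end>"  # marker that stops generation

# Hypothetical toy "language model": maps the text so far to a probability
# distribution over the next word. These numbers are invented for illustration
# and do not come from any real model.
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "The capital of Australia is": {"Sydney": 0.55, "Canberra": 0.40, "Melbourne": 0.05},
    "The capital of Australia is Sydney": {END: 1.0},
    "The capital of Australia is Canberra": {END: 1.0},
    "The capital of Australia is Melbourne": {END: 1.0},
}


def greedy_complete(prompt: str, max_steps: int = 10) -> Tuple[List[str], float]:
    """Append the most probable next word until END (hypothetical helper).

    Returns the generated words and the product of the chosen probabilities,
    i.e. how "confident" the model is in its own continuation. Nothing here
    checks whether the continuation is true.
    """
    words = prompt.split()
    generated: List[str] = []
    chosen_probs: List[float] = []
    for _ in range(max_steps):
        dist = TOY_MODEL.get(" ".join(words))
        if dist is None:
            break  # context not covered by the toy table
        next_word = max(dist, key=dist.get)  # greedy: highest probability wins
        chosen_probs.append(dist[next_word])
        if next_word == END:
            break
        generated.append(next_word)
        words.append(next_word)
    return generated, prod(chosen_probs)


prompt = "The capital of Australia is"
completion, p = greedy_complete(prompt)
print(prompt, " ".join(completion), f"(p={p:.2f})")
```

Run as written, this prints `The capital of Australia is Sydney (p=0.55)`. The sentence reads naturally and comes with a majority probability, yet the capital of Australia is Canberra: the error lies in the learned probabilities, and the decoding step has no way to notice it.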

Analysts at institutions such as the Brookings Institution emphasize that AI's ability to generate coherent, contextually relevant text, images, and video can be weaponized to spread propaganda and disinformation. This poses a significant threat to democratic processes and public discourse, particularly during critical periods such as election cycles. The ease of creating "deepfake" content and misleading narratives could further polarize online debates and erode trust in legitimate information sources.

Researchers are actively developing techniques to mitigate AI hallucinations, including improving the quality of training data, implementing advanced fact-checking mechanisms, and designing more robust model architectures. However, because AI tools are advancing rapidly and are widely accessible, the challenge of maintaining information integrity online is likely to intensify. Countering AI-generated misinformation remains a critical frontier for technology developers, social media platforms, and users navigating an increasingly complex digital landscape.