AI Prompting: Expert Declares Practice More Art Than Science, Questions Chain of Thought Efficacy

Leading AI expert Ethan Mollick recently sparked discussion on social media, asserting that the current state of artificial intelligence prompting remains "much more art than science." In a candid post, Mollick, a professor at the Wharton School, highlighted that many widely taught prompting techniques are either "best guesses or based on obsolete information," specifically citing that "chain of thought no longer helps much."

Mollick is a distinguished authority in the field of AI, recognized for his extensive research and practical insights into large language models and their applications across various sectors. His commentary often provides a grounded perspective on the rapidly evolving AI landscape, making his observations on prompt engineering particularly noteworthy for practitioners and researchers alike.

The assertion that prompting is more art than science underscores the lack of standardized, rigorously tested methodologies in the field. Effective prompting often relies on intuition, iterative refinement, and a deep understanding of a specific model's nuances rather than universally applicable rules. This approach contrasts with the scientific method, where hypotheses are systematically tested and replicated.
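To make the contrast concrete, here is a minimal sketch of what systematically testing a prompting technique could look like: score competing prompt templates against the same labeled cases and compare accuracy. The names `accuracy` and `compare_templates`, the `{question}` placeholder, and the exact-match scoring rule are all illustrative assumptions. The `model` argument stands in for whatever function sends a prompt to an LLM and returns its reply.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical types: a "model" is any function mapping a prompt to a reply,
# and a "case" pairs a question with its expected answer.
Model = Callable[[str], str]
Case = Tuple[str, str]


def accuracy(model: Model, template: str, cases: List[Case]) -> float:
    """Fraction of cases where the reply exactly matches the expected answer."""
    correct = sum(
        model(template.format(question=question)).strip() == expected
        for question, expected in cases
    )
    return correct / len(cases)


def compare_templates(
    model: Model, templates: Dict[str, str], cases: List[Case]
) -> Dict[str, float]:
    """Score every named template on the same cases so results are comparable."""
    return {
        name: accuracy(model, template, cases)
        for name, template in templates.items()
    }
```

Exact-match scoring is a simplification. A real evaluation would likely need more cases, repeated runs to account for sampling noise, and a more forgiving answer check.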

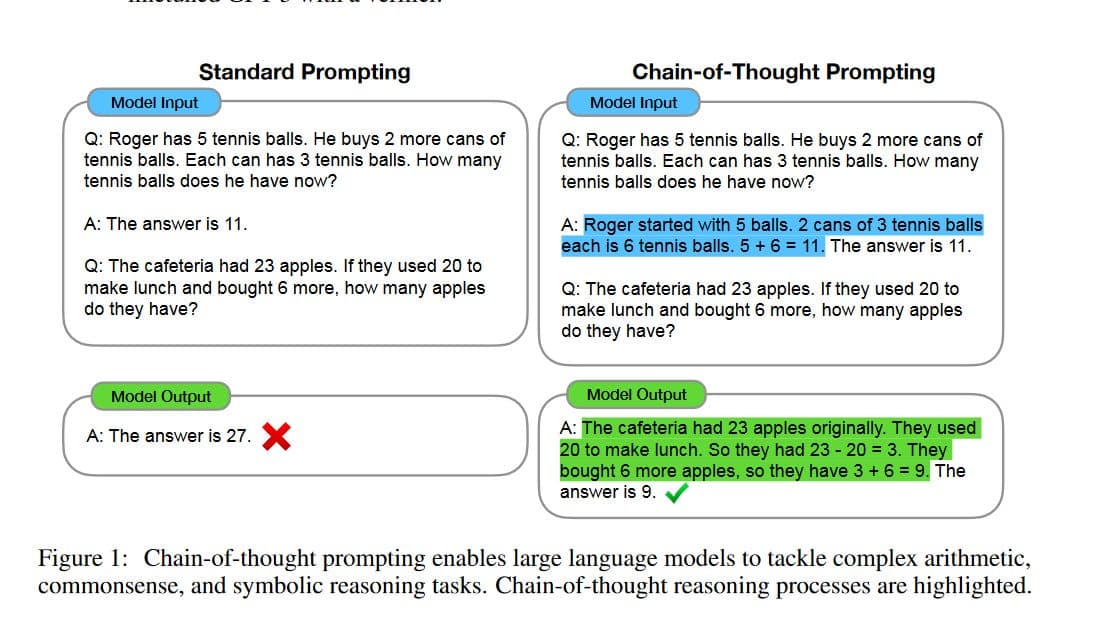

A key point of Mollick's critique targets Chain of Thought (CoT) prompting, a technique that gained prominence for improving LLM performance on complex reasoning tasks by asking the model to reason step by step before answering. CoT was influential, but its diminishing utility, as Mollick suggests, points to how quickly the underlying models have advanced and to the emergence of more sophisticated prompting paradigms.
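For readers unfamiliar with the technique, the difference between a direct prompt and a CoT prompt can be sketched in a few lines. The function names and the exact wording of the templates are illustrative assumptions, and no model is called here. Whether the extra instruction still improves results on current models is exactly what Mollick questions.

```python
def direct_prompt(question: str) -> str:
    """Ask for the answer with no reasoning instruction."""
    return f"Question: {question}\nAnswer:"


def cot_prompt(question: str) -> str:
    """Same question, plus an instruction to reason step by step first."""
    return (
        f"Question: {question}\n"
        "Let's think step by step, then give the final answer on its own line."
    )


if __name__ == "__main__":
    question = "A train travels 60 km in 45 minutes. What is its speed in km/h?"
    print(direct_prompt(question))
    print()
    print(cot_prompt(question))
```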

Indeed, the AI community is actively exploring advanced techniques such as Tree-of-Thought, Self-Consistency, and Retrieval-Augmented Generation (RAG). Tree-of-Thought and Self-Consistency build on CoT by exploring or sampling multiple reasoning paths, while RAG supplies the model with external knowledge at query time. These newer methods aim to enhance reasoning, factual accuracy, and overall model performance.
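As one illustration, Self-Consistency can be sketched as sampling several completions for the same prompt and keeping the most common final answer. This is a simplified sketch, not a reference implementation. The `sample` callable stands in for a model call with non-zero temperature, and the convention that each completion ends with an `Answer: ...` line is an assumption made for the example.

```python
from collections import Counter
from typing import Callable


def extract_answer(completion: str) -> str:
    """Return the text after the last 'Answer:' line (assumed output convention)."""
    for line in reversed(completion.strip().splitlines()):
        if line.lower().startswith("answer:"):
            return line.split(":", 1)[1].strip()
    # Fall back to the whole completion if no 'Answer:' line is present.
    return completion.strip()


def self_consistency(sample: Callable[[str], str], prompt: str, n: int = 5) -> str:
    """Sample n completions and return the majority final answer."""
    answers = [extract_answer(sample(prompt)) for _ in range(n)]
    return Counter(answers).most_common(1)[0][0]
```

The vote only helps when samples differ, so in practice `sample` would need to call the model with some randomness enabled. Ties are resolved in favor of the answer seen first.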

Mollick's perspective highlights the dynamic and experimental nature of interacting with advanced AI systems. His insights serve as a crucial reminder for developers and users to continuously adapt their strategies, move beyond outdated practices, and embrace ongoing experimentation to unlock the full potential of large language models in an ever-changing technological environment.