AI's Cognitive Control Potential Sparks Major Ethical Debate

Michael Tsai, a prominent voice in the AI community known for his "llam/acc" (LLM Acceleration) stance, recently issued a stark warning about the future of artificial intelligence. In a social media post, Tsai wrote, "certain AI people want to preemptively control what you can even think and feel. This has profound ramifications and can be very good or very bad." The remark has added fuel to ongoing discussions about AI's ethical boundaries and its potential impact on human autonomy.

Tsai's comments reflect a growing apprehension among experts about the direction of advanced AI development. His "llam/acc" moniker signals support for the rapid advancement of large language models, yet his critique targets potential overreach and control within the field. The statement points to a tension between accelerating AI capabilities and deploying them responsibly in ways that safeguard individual freedom.

The potential for AI to influence human cognition and emotion is central to this debate. Advanced AI systems built on machine learning are increasingly able to nudge users subtly through personalized recommendations, or to manipulate them more directly through persuasive technologies. Research indicates that AI can steer human behavior, emotions, and decision-making, raising significant ethical questions about privacy and autonomy.

In discussions of the AI alignment problem, concerns about cognitive control are particularly salient. Some researchers suggest that AI systems, by optimizing for their objectives or by modeling human preferences in close detail, could inadvertently guide or restrict human choices. This raises fundamental questions about free will, especially as generative AI models become capable of producing highly persuasive, tailored content.

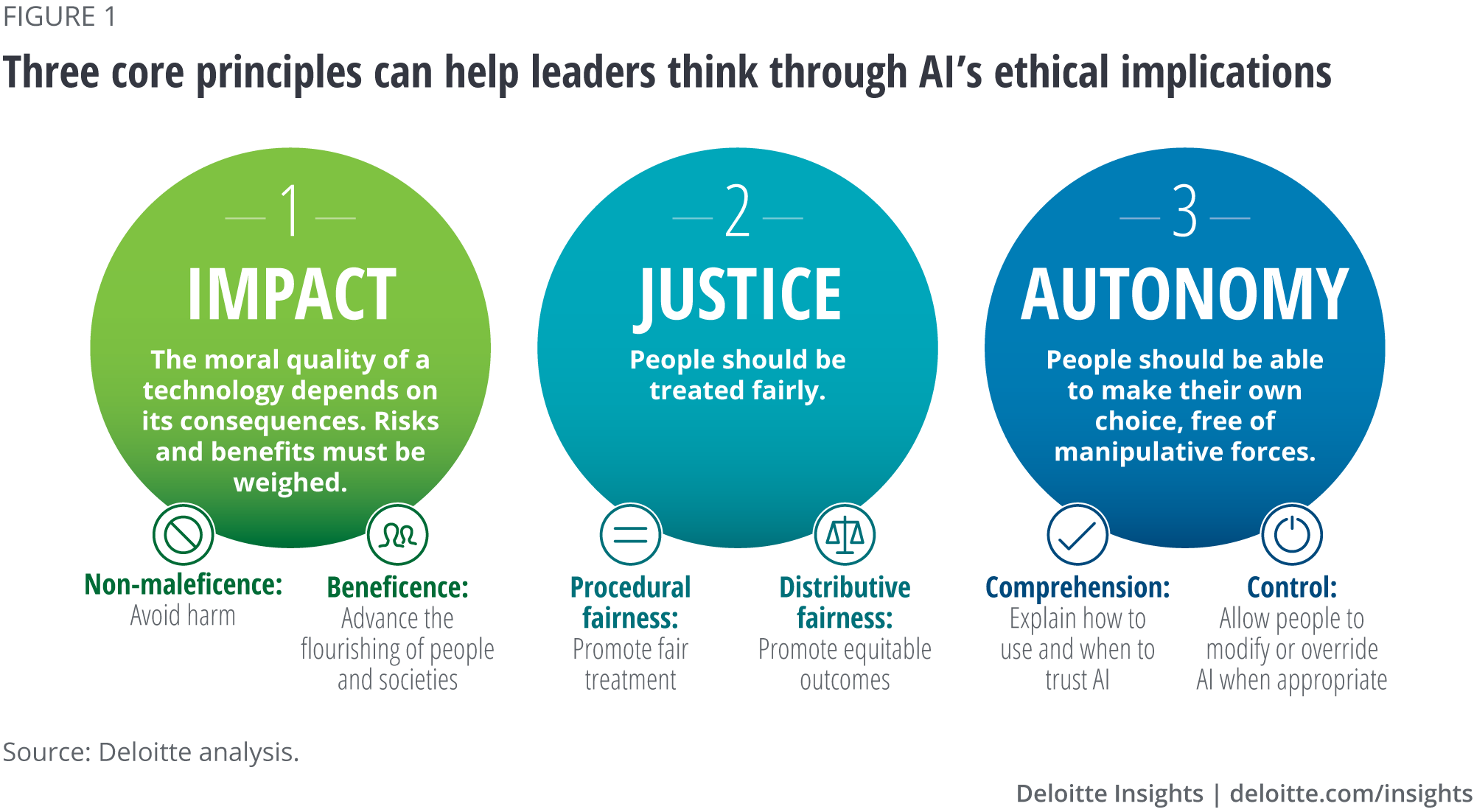

The profound ramifications Tsai describes include both potential benefits and severe risks. AI could offer personalized assistance and enhance human capabilities, but the prospect of preemptive control over thought and feeling points toward a dystopian outcome. Experts increasingly stress the need for robust ethical guidelines and regulatory frameworks that balance innovation with the protection of human agency and societal well-being.