DeepSeek-R1's GRPO Algorithm Boosts LLM Reasoning Length and Accuracy, Addressing PPO Limitations

A new reinforcement learning algorithm, Group Relative Policy Optimization (GRPO), employed in the DeepSeek-R1 model, is demonstrating significant advancements in enabling large language models (LLMs) to handle longer, more complex reasoning tasks. The algorithm reportedly resolves critical limitations inherent in traditional Proximal Policy Optimization (PPO) baselines, particularly concerning inference-time scaling and credit assignment for extended chains of thought. These insights were recently highlighted by Rohan Paul, outlining GRPO's mechanism and its impact on model performance.

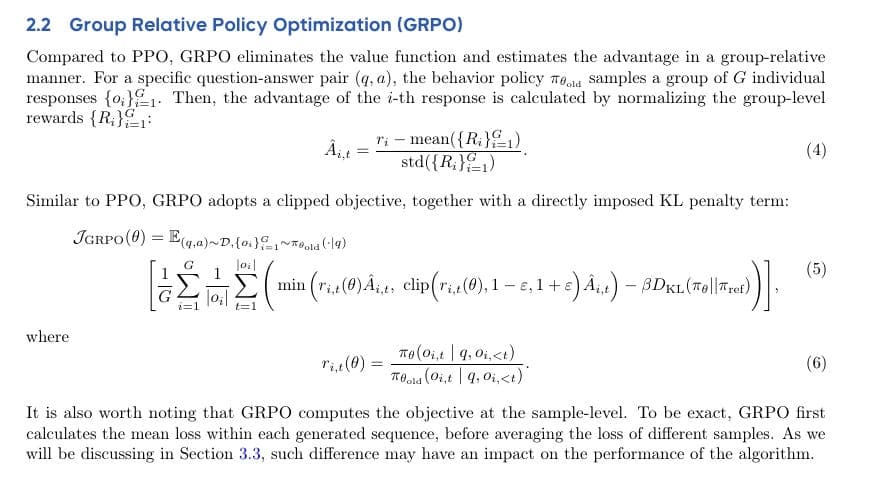

Traditional PPO methods often require a separate value model to estimate future rewards from partial text, a process that can be both "expensive and shaky when the only reward arrives at the very end," according to Paul. This reliance makes credit assignment for long chain-of-thought processes brittle, as early tokens are frequently revised, leading to unreliable partial predictions of answer quality. Furthermore, typical PPO applies a per-token KL penalty to a reference policy, which "implicitly pushes the policy toward shorter outputs" due to a growing total KL cost with length, effectively creating a "length tax."

GRPO addresses these challenges by fundamentally altering the optimization approach. It "removes that value model entirely," instead scoring whole sampled answers, which simplifies the credit assignment problem for long reasoning sequences. This method allows the model to learn that "more thinking" can be beneficial, as the length tax associated with per-token KL penalties is absent, replaced by a single, global KL term on the group of sampled answers. The DeepSeek-R1 paper details GRPO's group-based learning, which normalizes rewards within a group of multiple generated solutions, providing a stable and efficient way to optimize the policy without a large critic model.

The DeepSeek-R1 paper, which introduces the DeepSeek-R1-Zero and DeepSeek-R1 models, showcases the practical benefits of GRPO. During training, the model exhibited an increase in response length alongside improved accuracy, indicating the desired inference-time scaling behavior. This demonstrates that GRPO enables the model to effectively learn and utilize longer reasoning processes, leading to enhanced problem-solving capabilities.

In summary, GRPO's ability to score complete answers and apply a global KL term, rather than relying on a value model and token-wise KL penalties, allows for more robust credit assignment and encourages longer, more accurate reasoning from LLMs. This innovation, central to DeepSeek-R1, represents a notable step forward in overcoming a significant hurdle in the development of advanced reasoning capabilities in artificial intelligence.