Frontier AI Inference Caching Faces Implementation Gaps Despite Open-Source Component Availability

A recent social media post by DeepSeek enthusiast Teortaxes has sparked discussion in the artificial intelligence community by pointing to a perceived gap between the open-source components now available and the industry's progress on "frontier-grade" inference caching systems. The tweet, posted on September 4, 2025, suggested that the shortfall might stem from "skill issues" among major inference providers.

"it's a pretty unfortunate sign of skill issues, because it seems we have enough open-sourced components for a major inference provider to build a frontier-grade caching system. DeepSeek's own relies on 3FS and we have it, for example," Teortaxes wrote in the tweet.

DeepSeek, a prominent Chinese AI company known for its open-source large language models (LLMs), builds its infrastructure on 3FS (Fire-Flyer File System), an in-house distributed parallel file system that it open-sourced in February 2025. 3FS was designed around the random-read access pattern of training data loaders, where conventional read caching is described as "useless" because individual reads are rarely reused. The system is not limited to training, however: DeepSeek lists KVCache for inference among the workloads 3FS supports, presenting it as a higher-capacity, cost-effective alternative to DRAM-based caching. That is the sense in which, as the tweet notes, DeepSeek's own inference caching relies on 3FS.

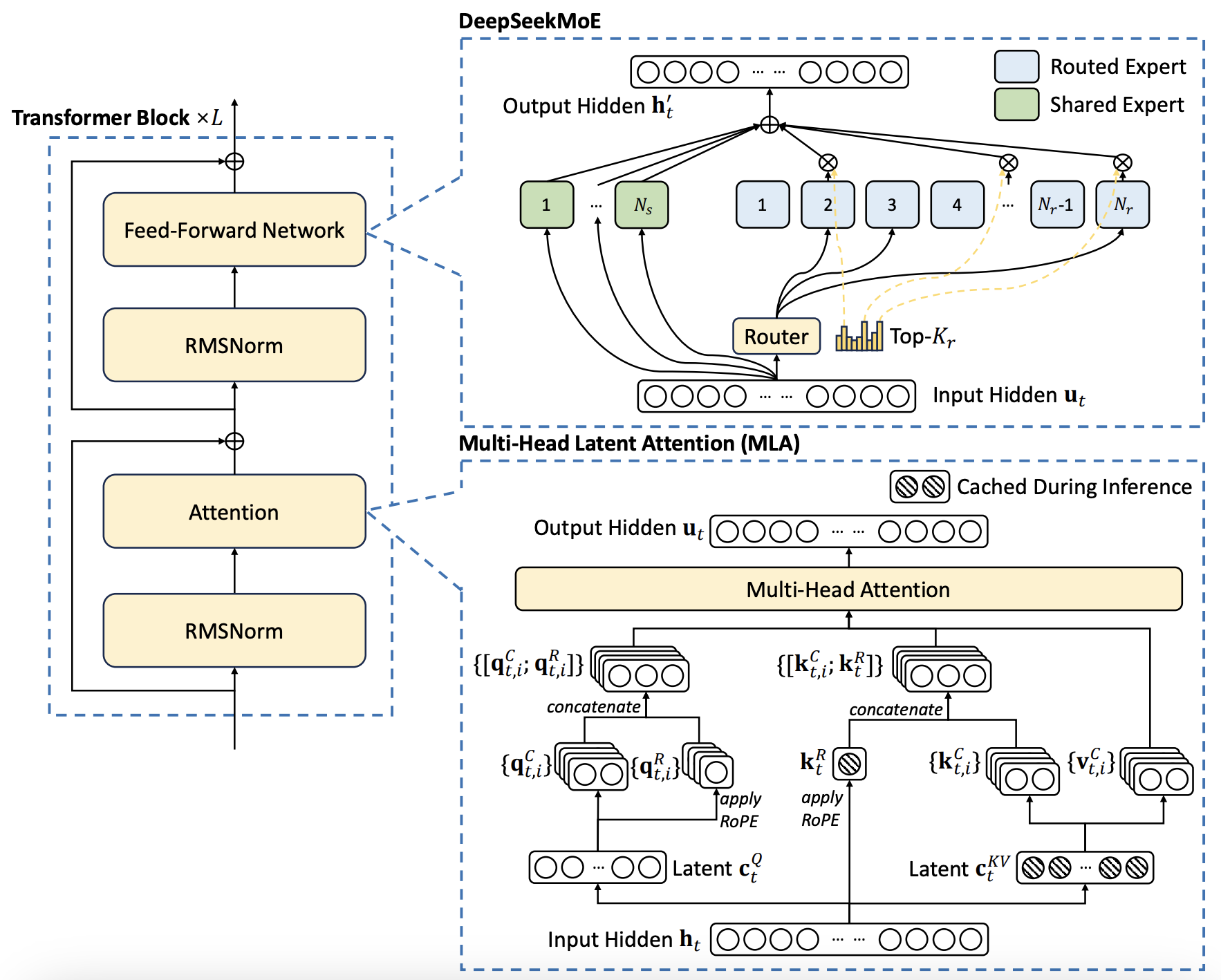

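Advanced caching, particularly key-value (KV) caching, is central to efficient large language model inference. During generation, a transformer computes attention key and value vectors for every token it processes; a KV cache stores them so that each new token requires computing only its own keys and values rather than reprocessing the whole sequence. Inference-level caching systems extend the idea across requests: when many queries share a prefix, such as a long system prompt or document, the stored KV entries can be reused instead of recomputed, cutting both latency and compute cost. Because the cache grows linearly with context length, longer context windows make managing this memory, including offloading it from GPU memory to CPU RAM or SSDs, a major part of serving LLMs efficiently.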
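
To put the memory pressure in numbers, the sketch below applies the standard estimate for KV cache size: one key and one value vector per layer, per KV head, per token. The model shape is hypothetical, picked only to keep the arithmetic round, and `kv_cache_bytes` is an illustrative helper rather than part of any library.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_elem: int = 2) -> int:
    """Bytes needed to hold the KV cache for one sequence.

    The leading factor of 2 covers storing both keys and values in every layer.
    bytes_per_elem=2 assumes fp16/bf16 storage.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_elem


# Hypothetical model shape for illustration only (not any specific model).
LAYERS, KV_HEADS, HEAD_DIM = 32, 8, 128

per_token = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=1)
full_ctx = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=131_072)

print(f"{per_token // 1024} KiB per token")                    # 128 KiB per token
print(f"{full_ctx / 2**30:.0f} GiB per 128K-token sequence")   # 16 GiB per 128K-token sequence
```

Under these assumed dimensions, a single long-context request holds about 16 GiB of KV state before any batching, which is why serving systems share, page, or offload KV entries rather than keeping every sequence resident on the GPU.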

Despite that need, the tweet implies that widespread, effective implementation is lagging. The open-source ecosystem already offers many of the building blocks for such systems: serving frameworks such as Hugging Face's Text Generation Inference (TGI), general tooling such as PyTorch, dedicated KV cache layers such as LMCache, which stores and reuses KV caches across GPU memory, CPU memory, and disk, and storage systems such as 3FS itself.
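
The core idea behind cross-request (prefix) caching, reusing stored KV entries when a new request begins with tokens the server has already processed, can be sketched in a few lines. This is a simplified illustration that assumes fixed-size token blocks keyed by chained hashes, similar in spirit to block-level prefix caching in open-source serving engines; `PrefixCache`, `block_hashes`, and `BLOCK_SIZE` are hypothetical names, and the stored values are placeholders for real KV tensors.

```python
import hashlib
from typing import Dict, List

BLOCK_SIZE = 4  # hypothetical; kept tiny for the demo


def block_hashes(token_ids: List[int], block_size: int = BLOCK_SIZE) -> List[str]:
    """Chain-hash each full block so a key identifies the whole prefix up to it."""
    hashes: List[str] = []
    parent = ""
    full = len(token_ids) - len(token_ids) % block_size  # ignore a trailing partial block
    for start in range(0, full, block_size):
        block = token_ids[start:start + block_size]
        payload = parent + "|" + ",".join(map(str, block))
        parent = hashlib.sha256(payload.encode()).hexdigest()
        hashes.append(parent)
    return hashes


class PrefixCache:
    """Hypothetical index from prefix-block hash to a stored KV handle."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def insert(self, token_ids: List[int]) -> None:
        # In a real system the value would point at KV tensors on GPU, CPU, or disk.
        for i, h in enumerate(block_hashes(token_ids)):
            self._store.setdefault(h, f"kv-block-{i}")

    def reusable_tokens(self, token_ids: List[int]) -> int:
        """Count leading tokens whose KV entries are already cached."""
        hits = 0
        for h in block_hashes(token_ids):
            if h not in self._store:
                break
            hits += 1
        return hits * BLOCK_SIZE


cache = PrefixCache()
system_prompt = list(range(100, 108))        # 8 tokens shared by both requests
cache.insert(system_prompt + [1, 2, 3, 4])   # first request: 12 tokens, 3 blocks
print(cache.reusable_tokens(system_prompt + [9, 9, 9, 9, 5]))  # 8
```

Chaining each block's hash to its parent means a cache hit on block *n* implies the entire prefix before it also matches, so lookup can stop at the first miss.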

The "skill issues" framing suggests that the obstacle is not missing technology but the engineering expertise needed to integrate these components into a cohesive, high-performance caching system. Major inference providers are under increasing pressure to deliver faster and more cost-efficient AI services, making the effective deployment of such caching mechanisms a key competitive differentiator in the rapidly evolving AI market.