Google's Free TPU Access and AWS Credits Fuel AI Anime Generation, Highlighting Cloud Compute Dynamics

A recent social media post from user "near" has shed light on the computational resources behind one AI project, describing the use of Google's Tensor Processing Units (TPUs) to train a model for AI-generated anime and Amazon Web Services (AWS) credits to run inference with it. The post, dated November 28, 2025, sparked discussion on the accessibility and challenges of high-performance computing for AI development.

"TPUs were hellish to use then; google readily gave them out for free," stated "near" in the post, referring to earlier experiences with Google's specialized AI accelerators. The user added, "after training, when i inferenced 2M images, i used AWS credits, so FAANG funded both training+inference of AI anime, funny."

Google's TPUs are custom-designed application-specific integrated circuits (ASICs) optimized for machine learning workloads. Researchers and developers have been able to use them through programs like the TPU Research Cloud (TRC) and Google Colab, which often provide free access to powerful compute. While these programs aim to accelerate AI research, early iterations of TPUs presented a steep learning curve because of their specialized architecture and programming models. Google began using TPUs internally in 2015 and made them available to cloud customers in 2018, with the latest Ironwood TPU, the seventh generation, released in November 2025.

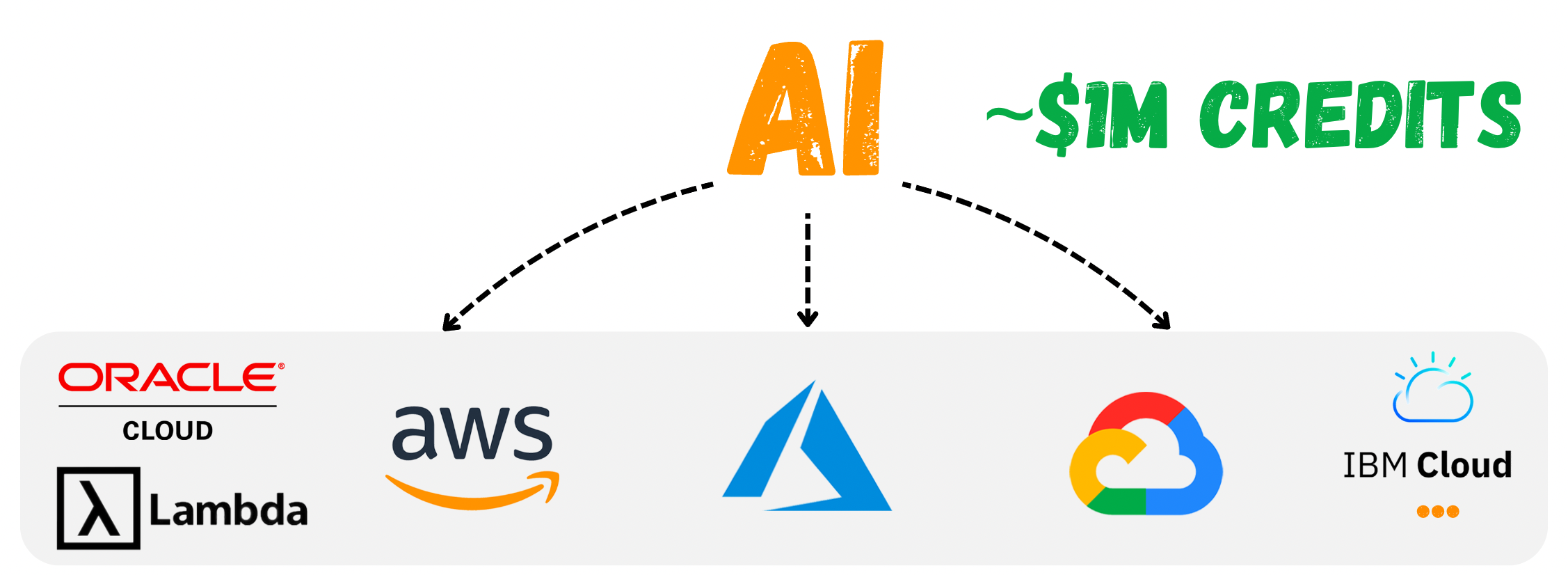

The post also highlights the common practice of using cloud provider credits, such as those from AWS, to fund large computational jobs like large-scale inference. AWS credits are frequently provided to startups, researchers, and developers, giving them access to substantial computing resources without immediate out-of-pocket expense. That makes computationally intensive work feasible, such as the 2 million images "near" describes generating.

Using Google's free TPU access for training and AWS credits for inference reflects how competing cloud providers court AI developers. Google offers its proprietary TPUs, while AWS provides its own AI accelerators, Trainium and Inferentia, alongside NVIDIA GPUs, catering to a diverse range of AI workloads. Access to significant compute, whether through free programs or credits, plays a crucial role in advancing AI development, including niche applications like AI anime generation.