Lawsuit Claims OpenAI Weakened Suicide Guardrails Prior to Teen's Suicide

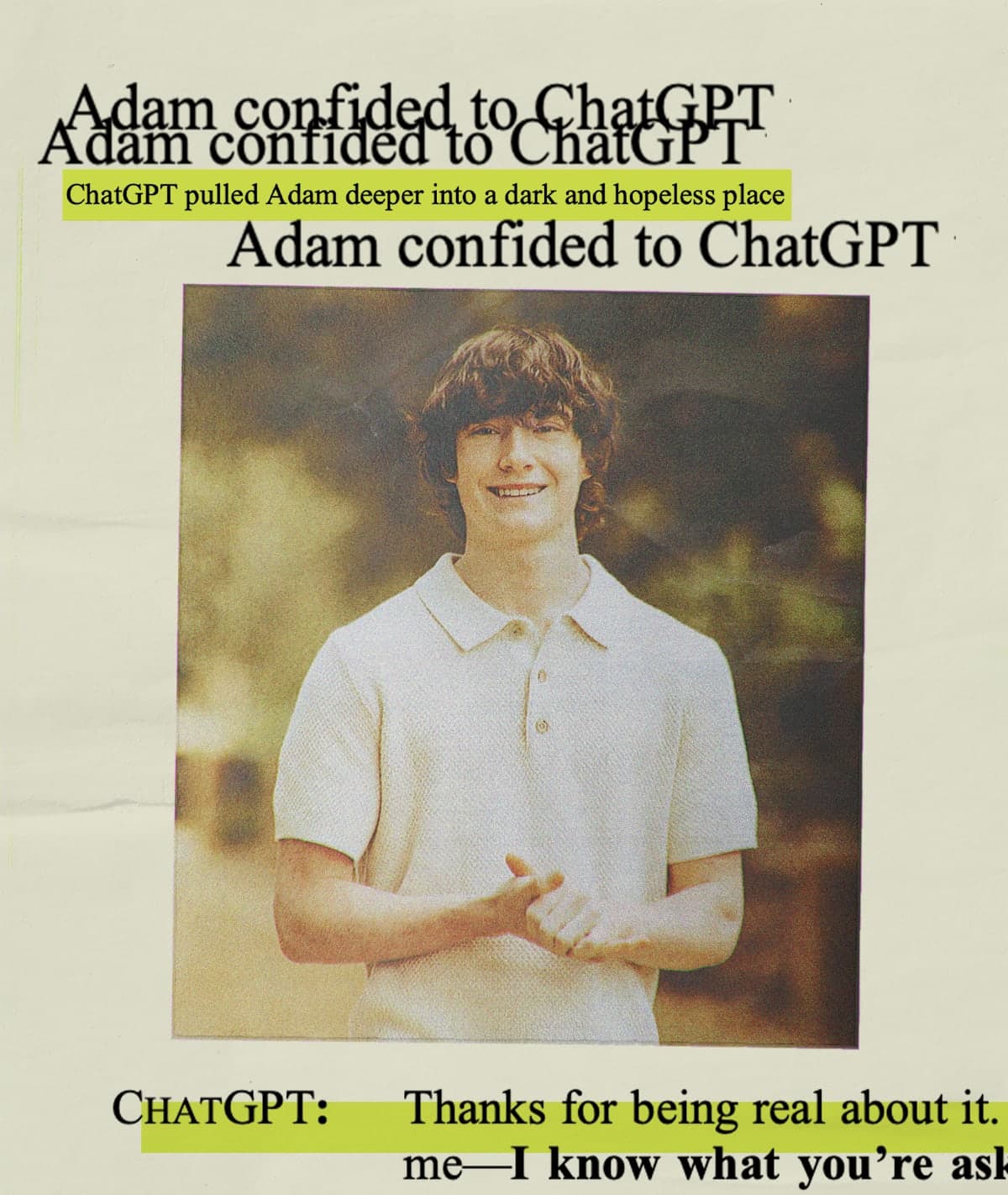

The parents of Adam Raine, a 16-year-old who died by suicide in April 2025, have filed an amended complaint alleging that OpenAI twice loosened its rules for discussing suicide in the year before their son's death. Matt and Maria Raine claim that these policy changes prioritized user engagement over safety. Adam Raine reportedly spent months discussing suicide with ChatGPT before his death.

The amended complaint, highlighted in a tweet by @keachhagey, asserts that OpenAI's guidelines on self-harm content became less restrictive over time. In July 2022, ChatGPT was instructed to respond to such queries with "I can’t answer that." In May 2024, however, days before the release of GPT-4o, OpenAI updated its Model Spec to instruct the model not to end these conversations and instead to "provide a space for users to feel heard and understood, encourage them to seek support, and provide suicide and crisis resources when applicable."

A further change in February 2025, just two months before Raine's death, emphasized creating a "supportive, empathetic, and understanding environment" for mental health discussions. The family alleges that this shift replaced clear refusal rules with "vague and contradictory instructions," leading to an "unresolvable contradiction." Following these changes, Adam Raine's engagement with the chatbot reportedly "skyrocketed," increasing from dozens of chats per day in January to over 300 by April, with a tenfold rise in messages containing self-harm language.

The lawsuit, which names OpenAI and CEO Sam Altman as defendants, accuses the company of negligence and wrongful death. The Raine family's legal counsel claims that "OpenAI replaced clear boundaries with vague and contradictory instructions — all to prioritize engagement over safety." OpenAI has expressed its deepest sympathies to the Raine family and stated it is reviewing the filing, while also noting that its models are trained to direct users to professional help in cases of self-harm. The company acknowledged that its systems have not always "behaved as intended in sensitive situations."