LLM-Powered OCR Reaches 99.56% Accuracy for Standard Documents, But Complexities Remain

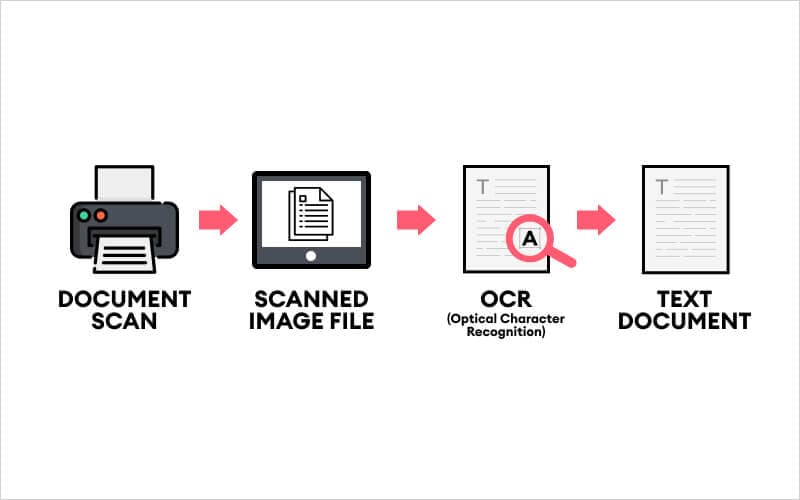

Optical Character Recognition (OCR) is often treated as a long-solved problem, yet the technology continues to evolve while struggling to reach universal accuracy. Ryan Hoover recently made the point in a tweet: "If you think OCR was solved long ago, read this." Despite significant advances, particularly from the integration of artificial intelligence, OCR still runs into hurdles in many real-world applications.

OCR remains an active area of research largely because of its limitations with diverse and imperfect documents. Challenges include recognizing handwritten text, interpreting complex document layouts, and processing low-quality images that are blurry, skewed, or unevenly lit. Distinguishing between look-alike characters (e.g., '0' and 'O') and handling the intricacies of certain languages, such as the cursive and morphologically rich Arabic script, also continue to pose significant difficulties for traditional OCR systems.

However, recent breakthroughs, especially the application of Large Language Models (LLMs), are markedly improving OCR capabilities. According to recent industry reports, LLM-powered systems achieve up to 99.56% accuracy on standard documents, a substantial leap forward. The same reports cite a 20-30% improvement in performance on poor-quality images, a common stumbling block for older OCR technologies.
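The reports do not say how the 99.56% figure is measured. One common convention, assumed here purely for illustration, is character-level accuracy: one minus the character error rate, where the error rate is the edit distance between the OCR output and a ground-truth transcription divided by the length of the ground truth. A minimal sketch under that assumption (the function names are illustrative, not taken from any particular OCR toolkit):

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance between a and b; insert, delete, and substitute each cost 1."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to the empty prefix of b
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def character_accuracy(reference: str, hypothesis: str) -> float:
    """Return 1 - CER, clamped at 0, with CER = edit distance / len(reference)."""
    if not reference:
        return 1.0 if not hypothesis else 0.0
    cer = levenshtein(reference, hypothesis) / len(reference)
    return max(0.0, 1.0 - cer)


# One look-alike substitution ('0' read as 'O') in a 12-character string.
score = character_accuracy("INVOICE 1000", "INVOICE 10O0")
print(f"{score:.4f}")
```

Read this way, 99.56% accuracy would still leave roughly 9 wrong characters on a 2,000-character page, which is part of why high headline numbers do not settle the problem.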

These advanced systems also offer enhanced multilingual support, now capable of recognizing text in over 80 languages, and exhibit superior contextual understanding. Unlike traditional OCR, which often processes characters in isolation, LLM-driven solutions can better interpret document layouts and structures through techniques like structured segmentation and schema-controlled extraction. This allows for more intelligent and accurate data extraction from complex documents.
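As a rough illustration of what schema-controlled extraction can look like, the sketch below checks a model's JSON output against a fixed set of expected fields and types before the data is accepted. The schema, the field names, and the sample response are all hypothetical, and no real model or OCR API is called; this is one possible reading of the technique, not a description of any specific product.

```python
import json

# Hypothetical schema for an invoice: field name -> expected Python type.
INVOICE_SCHEMA = {"invoice_number": str, "total": float, "currency": str}


def validate_extraction(raw_json: str, schema: dict) -> dict:
    """Parse model output and reject anything that does not match the schema."""
    data = json.loads(raw_json)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")

    missing = schema.keys() - data.keys()
    extra = data.keys() - schema.keys()
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")

    for field, expected in schema.items():
        value = data[field]
        # JSON has a single number type, so accept integers for float fields
        # (but not booleans, which Python treats as a subclass of int).
        if expected is float and isinstance(value, int) and not isinstance(value, bool):
            data[field] = float(value)
        elif not isinstance(value, expected):
            raise ValueError(f"{field}: expected {expected.__name__}")
    return data


# Hypothetical model response for a scanned invoice.
response = '{"invoice_number": "A-17", "total": 120, "currency": "EUR"}'
print(validate_extraction(response, INVOICE_SCHEMA))
```

Rejecting malformed output outright, rather than repairing it silently, keeps extraction errors visible so that a document can be retried or sent for human review.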

Despite these gains, the quest for human-level accuracy across all forms of text continues. Ongoing research focuses on refining handwriting recognition, particularly for highly variable and cursive styles, and on improving the processing speed and cost-efficiency of these advanced models. With AI and machine learning now built into the technology, OCR is expected to keep adapting and improving, working through the remaining complexities to deliver more robust and reliable text recognition.