Memory Bandwidth Becomes Critical Bottleneck for Generative AI Growth

The rapid expansion of generative AI (GenAI) capabilities is increasingly constrained by a fundamental limitation in hardware memory bandwidth, creating a significant "memory wall" that impedes the progress of large language models (LLMs). This bottleneck stems from a profound mismatch in scaling rates between computational power and memory access speeds over the past two decades. Rohan Paul, a prominent voice in the field, highlighted this disparity, stating, "Hardware Memory bandwidth is becoming the choke point slowing down GenAI."

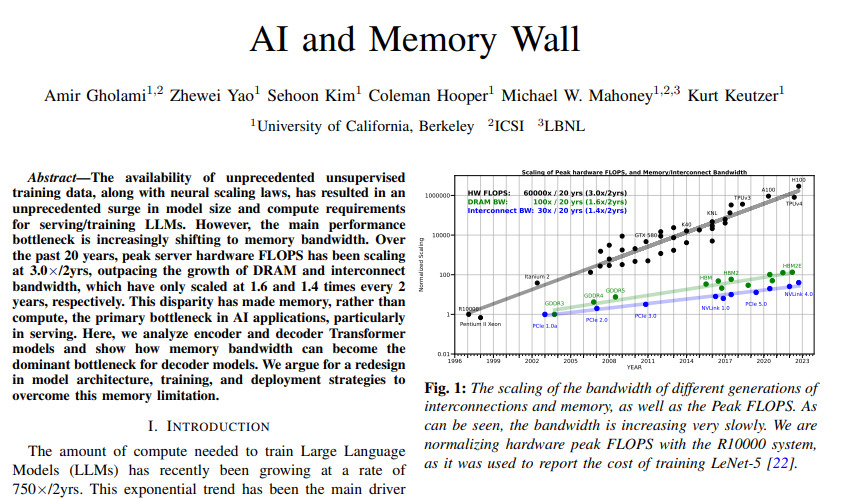

Between 2018 and 2022, transformer model sizes surged by approximately 410 times every two years, while memory per accelerator only doubled in the same period. This imbalance means that even with vast computational power, chips struggle to feed data fast enough from memory to processing units, leading to idle processors. Over the last 20 years, peak compute has risen by an astounding 60,000 times, contrasting sharply with DRAM bandwidth increasing by only 100 times and interconnect bandwidth by 30 times.

The "memory wall" presents distinct challenges across different deployment environments. In datacenters, current efforts primarily focus on applying more GPU compute power, with accelerator roadmaps emphasizing High Bandwidth Memory (HBM) capacity and bandwidth scaling, KV cache offload, and prefill-decode disaggregation. However, for edge AI applications, the tweet notes a critical lack of effective solutions, indicating a significant hurdle for pervasive AI.

This bandwidth limitation particularly impacts decoder-style LLM inference, which generates one token at a time. Each step reuses the same weights but must stream a growing Key-Value (KV) cache from memory, resulting in low arithmetic intensity. As the context expands, the KV cache grows linearly with sequence length and layer count, requiring more KV tensors to be read for every new token, thus dominating data movement.

Consequently, much of the recent research is shifting focus from merely adding FLOPs (floating-point operations) to reducing or reorganizing KV movement. Training LLMs further exacerbates memory demands, often requiring three to four times more memory than just parameters to hold gradients, optimizer states, and activations. This significant bandwidth gap, where moving weights, activations, and KV-cache is slower than raw compute, ultimately dictates the runtime and cost for modern LLMs.