The Elusive Definition of Artificial General Intelligence Persists Amidst Advancing AI

Artificial General Intelligence (AGI), the hypothetical ability of a machine to perform any intellectual task a human can, remains a subject of intense debate and evolving definition within the artificial intelligence community. Experts and researchers grapple with precisely what constitutes AGI, even as current AI systems demonstrate increasingly sophisticated capabilities. This ongoing definitional fluidity shapes public perception and research direction for what many consider the ultimate goal of AI development.

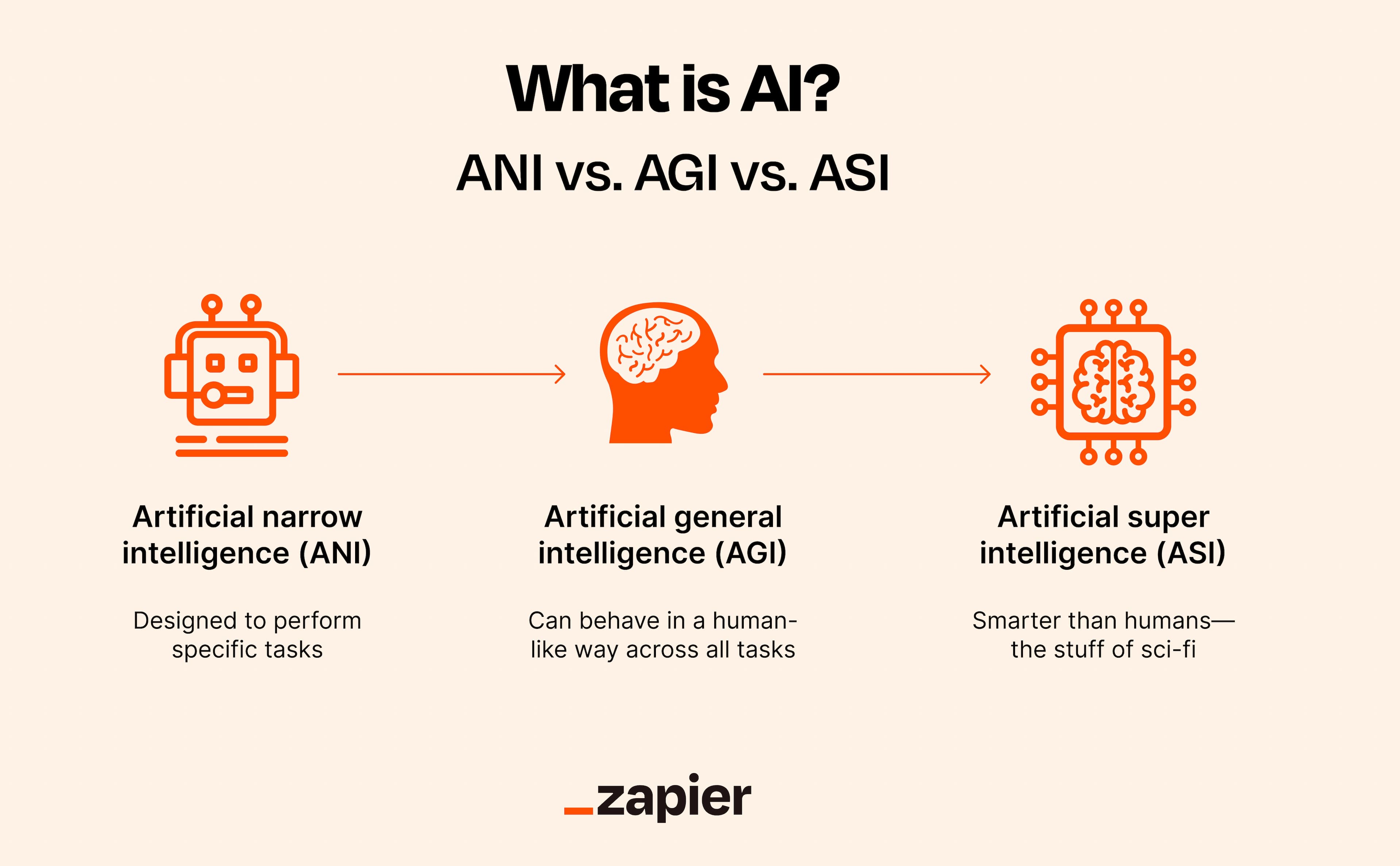

AGI is broadly understood as a theoretical stage where AI systems possess human-like cognitive abilities across diverse tasks, distinguishing it from Artificial Narrow Intelligence (ANI), which excels only in specific domains. The term itself was popularized by AI researcher Ben Goertzel in 2007, though earlier uses exist. Despite decades of research, the field has yet to reach a universal consensus on a precise definition of AGI, which has led to varied interpretations.

A core challenge highlighted by commentator David Shapiro is the phenomenon of "moving goalposts" in defining AGI. Shapiro stated in a recent tweet, "The reason that AGI is such a sticky term is because [we] need a word for 'maximally intelligent machines that we are working on building'." He further explained, "Every time we come up with some miraculous level of machine intelligence, you can always say 'well that's not the MOST intelligent a machine can be'." This suggests that as AI achieves new benchmarks, the bar for "general" intelligence is often recalibrated.

This perpetual redefinition feeds diverse societal reactions, ranging from alarm to optimism to skepticism. Shapiro noted that for some, the concept of AGI is a "cause for alarm," while for others, it inspires "optimism." The idea of AGI as a "maximally powerful machine" often takes on "mythic and archetypal" roles, being perceived as "intrinsically godlike" in its potential for omniscience, omnipotence, and omnipresence. This philosophical dimension underscores the profound impact that AGI's eventual realization, or lack thereof, could have.

While large language models (LLMs) represent significant strides in AI, they are generally considered steps towards AGI rather than AGI itself, as their competence remains uneven and still falls short of human-level performance across the full range of intellectual tasks. Many researchers believe true AGI is still decades away, if achievable at all, though estimates vary widely. As Shapiro concluded, until a better term emerges for "the ultimate evolution of machine intelligence," the concept of AGI will persist, always seeming "just out of reach. Just around the corner."