Trillion-Parameter AI Models Showcase Exponential Scaling in H100 Clusters

A recent social media post by John Palmer highlighted how rapidly large language model (LLM) development has advanced, illustrating the exponential scaling achieved with Mixture-of-Experts (MoE) architectures on advanced hardware such as NVIDIA H100 clusters. The post described an exchange between two models training concurrently: a 7-billion-parameter model and a 1.8-trillion-parameter MoE model.

Palmer's tweet captured the essence of this technological leap, quoting the smaller model's bewildered query:

> “How the fuck did you get so smart???”

The state-of-the-art model replied:

> “I ingest high-quality tokens until my loss curve flattens… once I stop hallucinating, I add more synthetic data and increase the compute budget. I’ve been scaling this up since ‘Attention Is All You Need’ dropped in 2017.”

This exchange underscores the relentless pursuit of scale in AI.

Mixture-of-Experts models, such as the rumored 1.8-trillion-parameter GPT-4 or Mistral's Mixtral 8x7B, use sparse activation: a router engages only a subset of "expert" networks for any given input. Because per-token compute depends on the experts that are active rather than on the total parameter count, this architecture allows for significantly larger models without a proportional increase in computational cost during inference, making trillion-parameter scale feasible. Proponents argue that, at a similar compute budget, MoE models train more efficiently, run inference faster, and reach higher accuracy than dense models.
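
The sparse activation described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular production model is implemented. It assumes 8 experts with the top 2 selected per input, the configuration commonly described for Mixtral 8x7B. The expert functions (`make_expert`) and the router scores (`logits`) are hypothetical stand-ins; in a real model the router scores come from a learned layer applied to each token.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_layer(x, experts, router_logits, k=2):
    """Weighted sum of the k selected experts' outputs; the rest never run."""
    output = [0.0] * len(x)
    used = []
    for index, weight in top_k_route(router_logits, k):
        expert_out = experts[index](x)
        output = [o + weight * y for o, y in zip(output, expert_out)]
        used.append(index)
    return output, used


def make_expert(scale):
    """Hypothetical stand-in for an expert feed-forward network."""
    return lambda x: [scale * v for v in x]


experts = [make_expert(s) for s in range(1, 9)]       # 8 toy experts
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]   # made-up router scores
output, used = moe_layer([1.0, 2.0], experts, logits, k=2)
print(used)  # [4, 1]
```

Only two of the eight experts are ever called for this input, which is why the compute per input tracks the number of active experts rather than the total parameter count.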

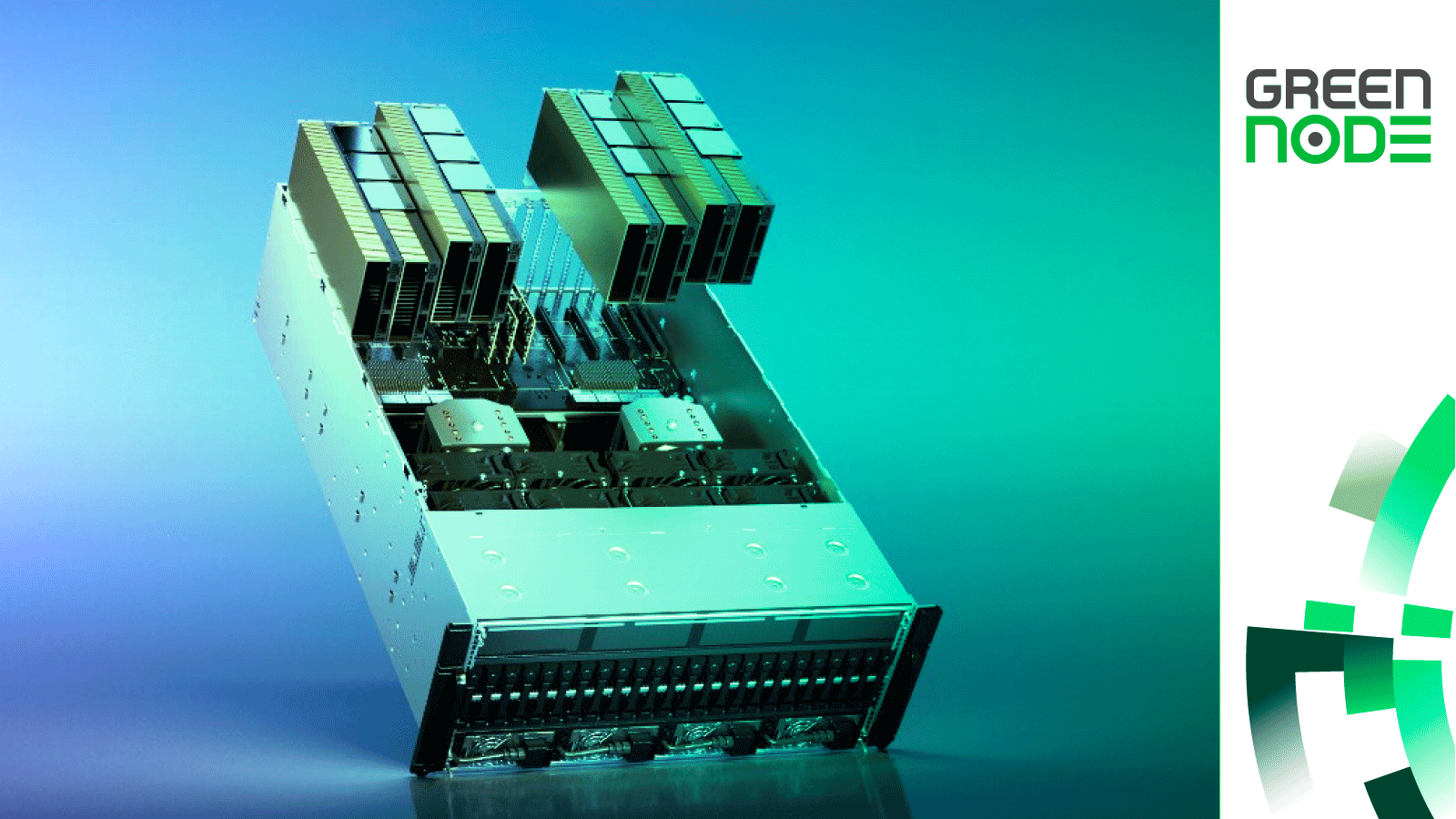

Training such massive models relies heavily on powerful hardware infrastructure, with NVIDIA H100 GPUs a cornerstone of high-performance AI computation. H100 clusters provide the processing power and memory bandwidth needed to handle the data volumes and parameter counts involved in training models that can exceed one trillion parameters. The continued evolution of GPU technology, including the upcoming NVIDIA Blackwell platform, promises further gains in inference throughput and user interactivity for these complex models.
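
A back-of-envelope calculation gives a sense of why clusters, rather than single GPUs, are required at this scale. The sketch below is only a rough lower bound under stated assumptions: 2 bytes per parameter (16-bit weights), 80 GB of memory per H100, and weights only, ignoring gradients, optimizer state, and activations, all of which raise the real training footprint considerably. The function name `min_gpus_for_weights` is made up for this illustration.

```python
def min_gpus_for_weights(num_params, bytes_per_param=2, gpu_memory_gb=80):
    """Fewest GPUs whose combined memory can hold the weights alone.

    Assumes 16-bit weights and 80 GB per GPU by default; real training
    needs far more memory for gradients, optimizer state, and activations.
    """
    weight_bytes = num_params * bytes_per_param
    gpu_bytes = gpu_memory_gb * 10**9
    return -(-weight_bytes // gpu_bytes)  # integer ceiling division


print(min_gpus_for_weights(1_800_000_000_000))  # 45
print(min_gpus_for_weights(7_000_000_000))      # 1
```

Under these assumptions, the weights of a 1.8-trillion-parameter model occupy about 3.6 TB and must be spread across dozens of GPUs, while a 7-billion-parameter model's roughly 14 GB of weights fit on one.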

The foundational shift in AI development referenced by the 1.8-trillion-parameter model in Palmer's post traces back to the 2017 paper "Attention Is All You Need." This seminal work introduced the Transformer architecture, which revolutionized natural language processing and paved the way for the current generation of large language models. Scaling, meaning continual increases in data quality, compute budget, and parameter count, has been a driving force behind the dramatic improvements in AI capabilities over the past several years.