AI-Generated Code Sparks 'Write Never, Read Never' Debate, Raising Concerns for Software Readability

A recent social media post by programmer and writer Alice Maz has ignited a discussion within the software development community, humorously suggesting that advancements in Artificial Intelligence are leading towards a future of "write never, read never" programming. Her observation underscores growing concerns about the implications of AI code generation for code readability, maintainability, and the role of human developers.

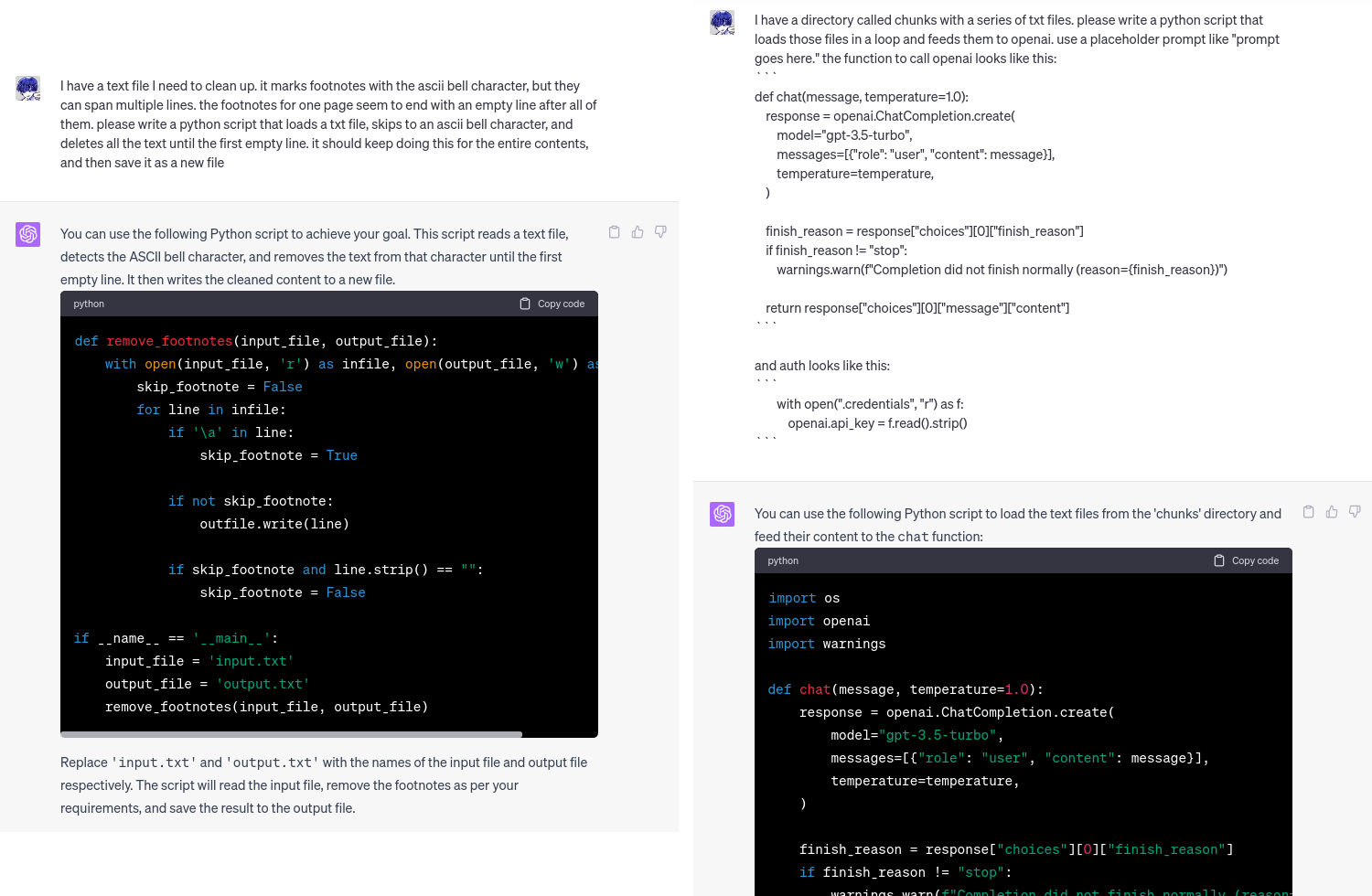

Maz's tweet stated:

> "the old joke about perl is that it was 'write once read never' but thanks to ai we have achieved 'write never read never' programming."

This quip references the long-standing jest about the Perl programming language, known for its capacity to produce highly compact yet often inscrutable code. The updated joke posits that AI tools might automate both the creation and eventual understanding of code, potentially bypassing human involvement entirely.

While AI code generation tools are lauded for enhancing developer productivity, their impact on code quality remains a subject of ongoing study. Research from 2025 indicates that AI-generated code, particularly from models like Claude 3.5 and GPT-4, can exhibit higher clarity, structure, and conciseness than human-written code in certain contexts. However, the same research also highlights that AI-produced code may lack the nuanced context, robust error handling, and adherence to specific architectural principles that human developers typically provide.

Alice Maz herself has articulated a nuanced perspective on AI, describing it as a "lever" for augmenting human capabilities and automating repetitive tasks rather than a direct replacement for creative problem-solving. She has drawn parallels between current skepticism towards AI and historical resistance to new programming tools like compilers, emphasizing the need for adaptation and understanding how to effectively "dance with the tool."

Although productivity boosts of 20% to 45% have been reported for developers using AI coding assistants, experts caution that these gains can be offset by the time required for debugging, refactoring, and ensuring architectural alignment. The quality of AI-generated code varies significantly across models and programming languages, with some tools struggling to adhere to specific industry standards or integrate seamlessly into complex legacy systems. The ongoing debate centers on how best to harness AI's power while preserving critical human oversight and ensuring the long-term health and comprehensibility of software.