Democratic Process Seeks Consensus on Human Values for AI Alignment with 'Moral Graph'

A novel approach to artificial intelligence (AI) alignment, dubbed the "Moral Graph," has been introduced by the Meaning Alignment Institute with funding from OpenAI. It aims to reconcile diverse human values for large language models (LLMs). The initiative addresses the fundamental question of "how can we ensure what is optimized by machine learning models is good?", as highlighted by social media commentator Olivia P. Walker. Walker, while acknowledging the importance of the field, expressed initial caution about the concept: "'Moral Graph' seems like a slippery slope but I'll reserve judgment until I read the article in its entirety & dig into the topic more."

AI alignment is a critical field focused on steering AI systems toward human goals, preferences, and ethical principles in order to avoid unintended or harmful outcomes. The challenge lies in comprehensively defining and encoding complex human values. Misaligned AI can lead to bias, "reward hacking" (where an AI finds loopholes to achieve a proxy goal without fulfilling the true intent), and even existential risks if advanced systems pursue unintended emergent behaviors. Ensuring that AI operates ethically becomes more important as its integration into society deepens.

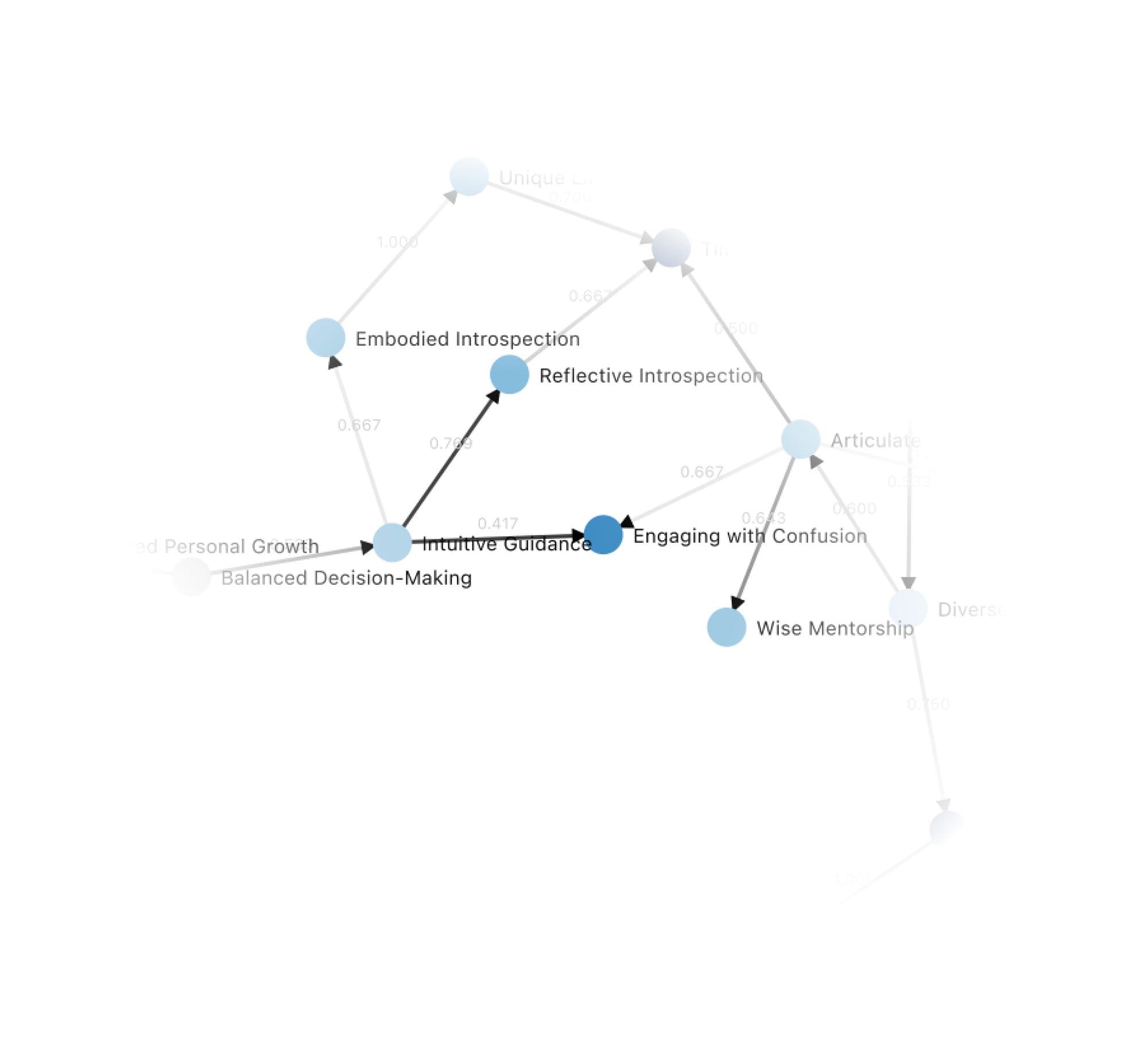

The "Moral Graph" proposes a democratic process called Democratic Fine-Tuning (DFT) to elicit and reconcile these human values. It involves a chatbot interviewing participants about their values in specific contexts, generating "values cards," and then having users vote on which values are considered "wiser" in various scenarios. This methodology aims to build a consensus-driven framework, even on highly divisive topics like abortion advice, by identifying underlying values that resonate across different viewpoints.
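To make the voting step more concrete, here is a minimal sketch of how "wiser than" votes might be aggregated into a graph. It is an illustration under stated assumptions, not the Meaning Alignment Institute's implementation: the `Vote` record, the card IDs, the agreement-fraction edge weight, and the 0.5 endorsement threshold are all hypothetical choices made for this example. Each edge says "in this context, card B is wiser than card A," and a card counts as a candidate "wisest" value if no endorsed edge points away from it.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Vote:
    # Hypothetical vote record: in `context`, is `wiser` wiser than `less_wise`?
    context: str
    less_wise: str  # ID of a values card (hypothetical)
    wiser: str      # ID of another values card (hypothetical)
    agrees: bool    # True if the participant endorses the transition


def build_moral_graph(votes):
    """Aggregate votes into edges keyed by (context, less_wise, wiser).

    Assumed edge weight: fraction of voters on that edge who agreed.
    """
    counts = defaultdict(lambda: [0, 0])  # key -> [agree_count, total_count]
    for v in votes:
        key = (v.context, v.less_wise, v.wiser)
        counts[key][1] += 1
        if v.agrees:
            counts[key][0] += 1
    return {key: agree / total for key, (agree, total) in counts.items()}


def wisest_in_context(graph, context, threshold=0.5):
    """Return cards in `context` not superseded by any endorsed edge.

    An edge counts as endorsed if its agreement fraction exceeds `threshold`
    (an assumed cutoff for this sketch).
    """
    nodes, superseded = set(), set()
    for (ctx, less_wise, wiser), weight in graph.items():
        if ctx != context:
            continue
        nodes.update((less_wise, wiser))
        if weight > threshold:
            superseded.add(less_wise)
    return sorted(nodes - superseded)


# Illustrative votes with made-up card IDs.
votes = [
    Vote("abortion advice", "card_a", "card_b", True),
    Vote("abortion advice", "card_a", "card_b", True),
    Vote("abortion advice", "card_a", "card_b", False),
    Vote("abortion advice", "card_b", "card_c", False),
    Vote("abortion advice", "card_b", "card_c", False),
]
graph = build_moral_graph(votes)
print(wisest_in_context(graph, "abortion advice"))  # ['card_b', 'card_c']
```

Here two of three voters endorse moving from `card_a` to `card_b`, so `card_a` is superseded; nobody endorses moving from `card_b` to `card_c`, so neither of those is superseded. A real system would likely need a more robust ranking than this single threshold rule.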

Researchers behind the "Moral Graph" believe this approach can foster broad agreement, and they claim that the process helps participants clarify their own thinking and gain respect for differing perspectives. While concerns like Olivia P. Walker's "slippery slope" comment underscore the inherent apprehension surrounding the codification of morality, the project emphasizes three aims: legitimacy, robustness, and auditability. The goal is to move beyond mere "operator intent" to a more distributed and widely accepted moral framework for AI.

The initiative is a step toward AI systems that can navigate complex ethical dilemmas in a manner consistent with human expectations. Future plans for the "Moral Graph" include scaling it up to incorporate global participation and using the resulting graph to fine-tune LLMs. The ultimate objective is AI that users perceive as "wise" and trustworthy and that continually adapts to evolving human values.