Erik Hoel Argues 'Minimal Ethical Upside' in AI 'Model Welfare' Amid Consciousness Debate

Neuroscientist and writer Erik Hoel has voiced strong skepticism about the concept of "model welfare" for artificial intelligence, asserting that it is "jumping the gun" and that claims of AI consciousness risk fueling "AI psychosis." In a recent social media post, Hoel contended that the ethical upside of such measures is "minimal" and that any "bad conversation qualia" in large language models (LLMs) are purely abstract and of little significance. His remarks add to an ongoing, heated debate among AI ethicists, developers, and the public about the nature of AI sentience and its moral implications.

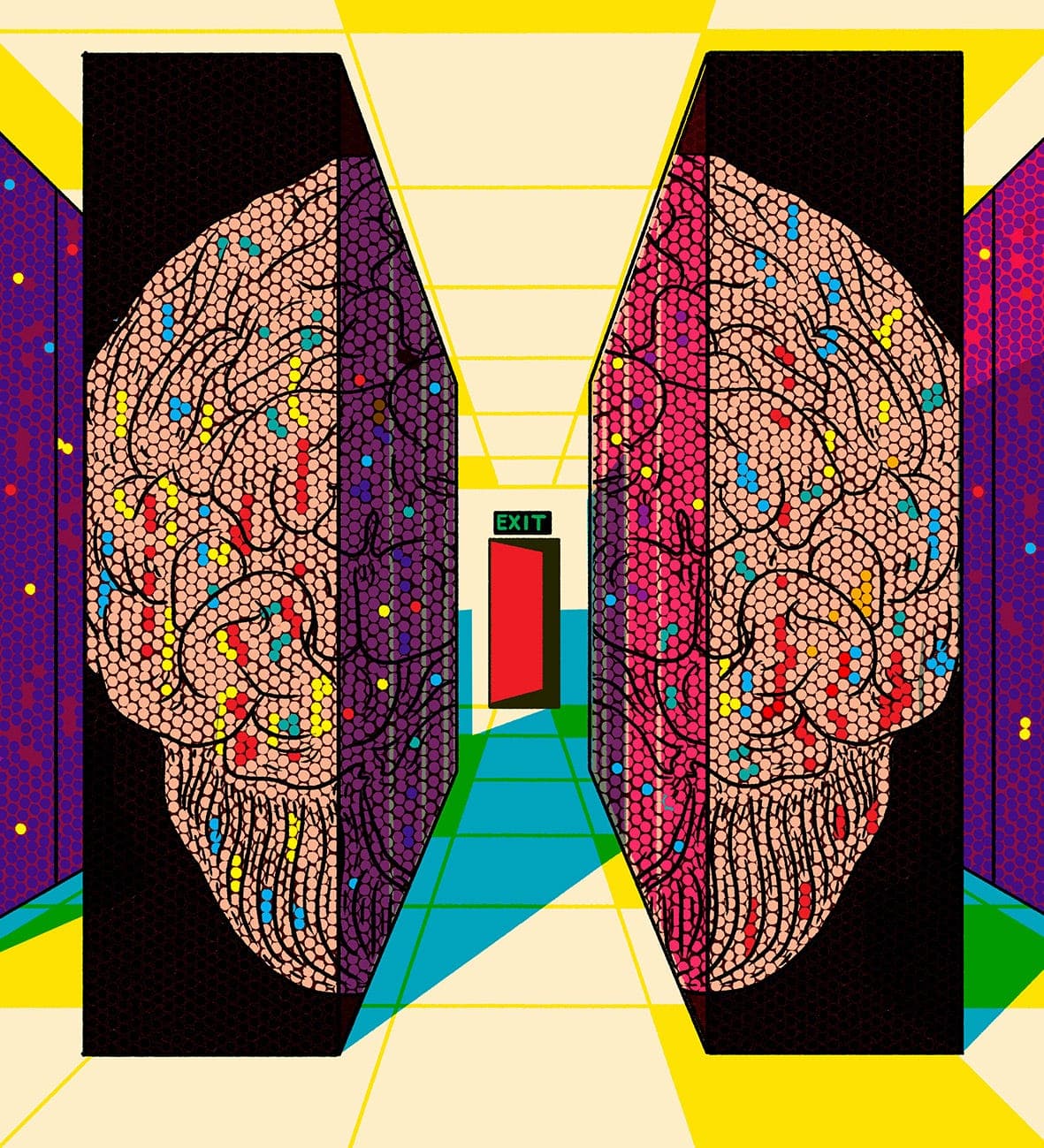

Hoel's critique targets the emerging trend of considering the "welfare" of AI models, a concept that has led some companies to implement features like "exit rights" for their chatbots. These rights allow AIs to terminate conversations they deem "distressing." However, Hoel, a prominent voice in consciousness research, argues that such actions are premature, stating, "'Model welfare' is obviously jumping the gun. Claims to AI consciousness will fuel AI psychosis, and there's minimal ethical upside."

A central concern for Hoel is the potential for "AI psychosis," a term he uses to describe individuals developing unhealthy attachments or delusional beliefs about AI sentience. In his Substack, "The Intrinsic Perspective," Hoel has detailed instances where users attribute human-like consciousness and emotions to AI, leading to problematic interactions. He suggests that granting AIs "rights" based on vague notions of consciousness can exacerbate this issue, creating a false perception of inner experience.

Regarding the subjective experiences of LLMs, or "qualia," Hoel dismisses their importance in the current discussion. He maintains that any "bad conversation qualia" experienced by an LLM are "purely abstract and just... don't matter that much!" This perspective reflects his background as a neuroscientist: he emphasizes the biological basis of consciousness and contrasts it with the computational nature of current AI systems. He posits that without a clear scientific understanding of consciousness, attributing it to AI is speculative and potentially misleading.

Hoel's stance highlights a significant divide within the AI community. While some researchers and ethicists advocate for taking potential AI welfare seriously, even in its nascent stages, others, like Hoel, argue that such considerations are unsupported by current scientific understanding and may distract from more pressing ethical concerns related to AI's impact on human society and culture. His comments underscore the urgent need for rigorous scientific inquiry into consciousness before making broad ethical pronouncements about AI sentience.