Google's Next-Gen TPU Delivers 42-Fold Compute Leap, Signaling Major AI Acceleration

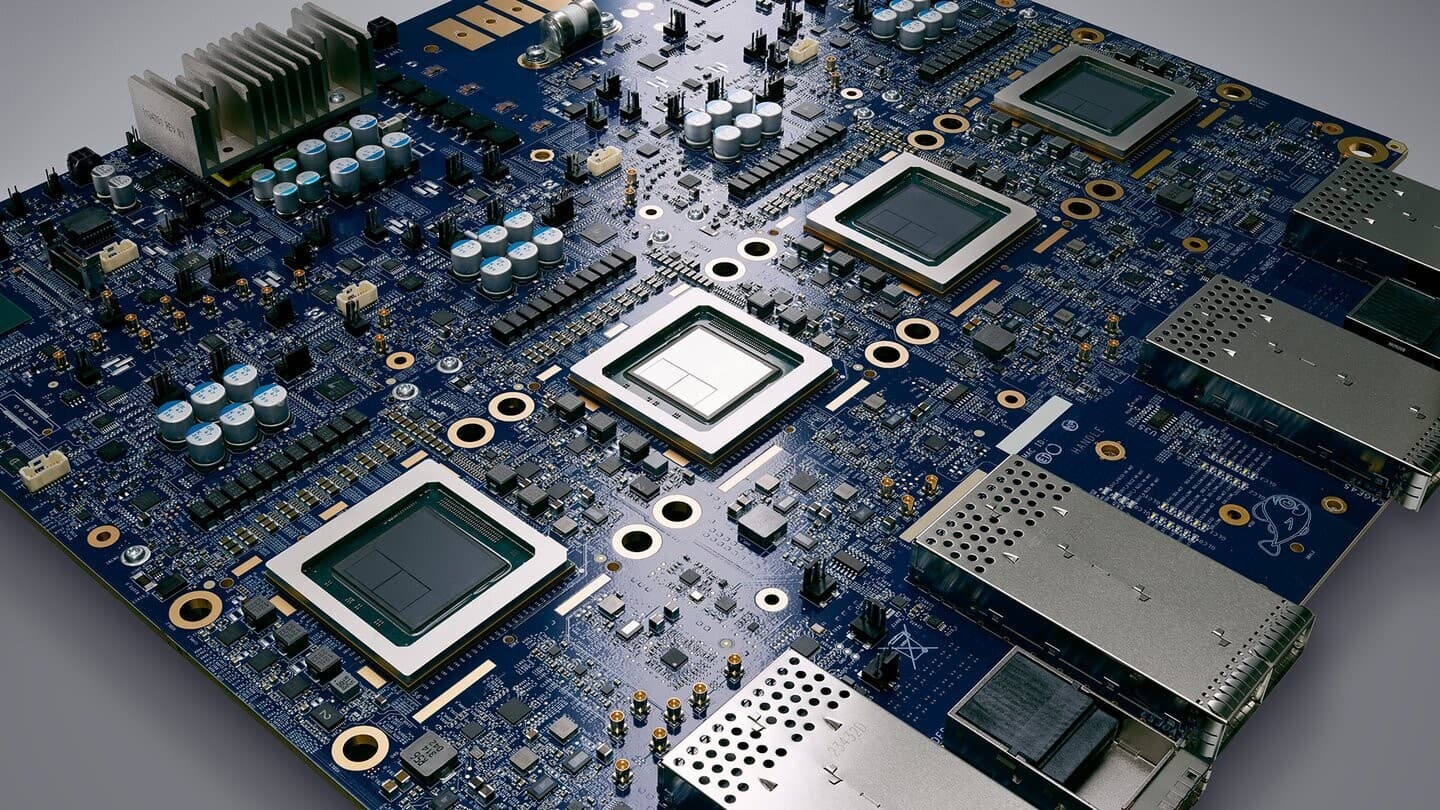

Google's Tensor Processing Units (TPUs) are evolving rapidly: a recent social media post highlighted a 42-fold increase in compute power from TPU v2 to a purported TPU v7. The advancement underscores Google's push for greater capability in artificial intelligence and machine learning workloads, which is crucial for training increasingly complex models. A leap of this size could redefine the boundaries of AI development.

> "I remember being at Google and being excited to run a workload of 1 exaflop (full pod) on TPU v2. TPU v7 now has 42× more compute 🤯 insane," stated "Beff – e/acc" in a recent tweet, emphasizing the dramatic progress.

An exaflop represents one quintillion (10^18) floating-point operations per second, a benchmark for supercomputing performance that matters for modern AI. While specific public details on a "TPU v7" are limited, Google has consistently advanced its TPU generations, with its TPU v4 Pods already achieving over 1 exaflop of AI compute.

Google's custom-designed TPUs are application-specific integrated circuits (ASICs) optimized for the matrix multiplications and convolutions prevalent in neural networks. For these workloads, the specialized processors can deliver better performance and energy efficiency than general-purpose CPUs or GPUs. The continuous evolution, from the inference-focused TPU v1 to the training-centric TPU v2 and subsequent generations, has been instrumental in scaling AI research and applications.

The company's latest publicly announced next-generation TPU, named "Trillium," is slated for availability in late 2024. Trillium is designed to deliver a 4.7x improvement in peak compute per chip over TPU v5e and to be over 67% more energy-efficient than TPU v5e. Such advancements are critical for the demanding computational requirements of large language models (LLMs) and other advanced AI systems.

The 42x compute jump, whether it refers to Trillium or an even newer internal iteration, signals an aggressive Google roadmap in AI hardware. Growth of this scale in TPU capability directly affects the development of sophisticated AI models, enabling faster training and the exploration of larger, more intricate neural network architectures. The ability to process vast datasets at exascale is foundational for progress in natural language processing, computer vision, and other AI domains. Google's ongoing investment in custom AI silicon keeps it among the leaders of the global AI race.
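
To give a sense of scale, the short sketch below works through the arithmetic implied by the quoted figures. It takes the tweet's 1-exaflop baseline and 42x multiplier at face value and assumes ideal linear scaling; the function name `scaled_compute` and the 42-hour job are illustrative only, not published Google figures.

```python
# Arithmetic sketch using the figures quoted in the tweet (taken at face value).
EXAFLOP = 10**18  # one quintillion floating-point operations per second


def scaled_compute(baseline_flops: int, factor: int) -> int:
    """Return the compute rate after multiplying a baseline by `factor`."""
    return baseline_flops * factor


baseline = 1 * EXAFLOP                  # the "full pod" figure cited in the tweet
scaled = scaled_compute(baseline, 42)   # the claimed 42x increase

print(f"Baseline: {baseline:.1e} FLOP/s")
print(f"Scaled:   {scaled:.1e} FLOP/s")

# Hypothetical job: 42 hours at the baseline rate, assuming perfectly linear
# scaling, which real training workloads rarely achieve.
hours_at_baseline = 42
hours_at_scaled = hours_at_baseline * baseline / scaled
print(f"A {hours_at_baseline}-hour job would take about {hours_at_scaled:.0f} hour(s)")
```

In practice, memory bandwidth, interconnect, and software efficiency all limit how much of a peak-compute increase shows up as shorter training time, so the last figure is an upper bound on the speedup.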