Google's TPUs Power Leading AI Models, Signaling Shift from NVIDIA Dominance

A social media post from user "signüll" on November 20, 2025, sparked discussion across the AI community: "the best model right now was not trained on nvidia chips. that statement still feels really weird to say." The remark captures a significant shift in the AI hardware landscape, as Google's Tensor Processing Units (TPUs) increasingly power some of the most advanced AI models.

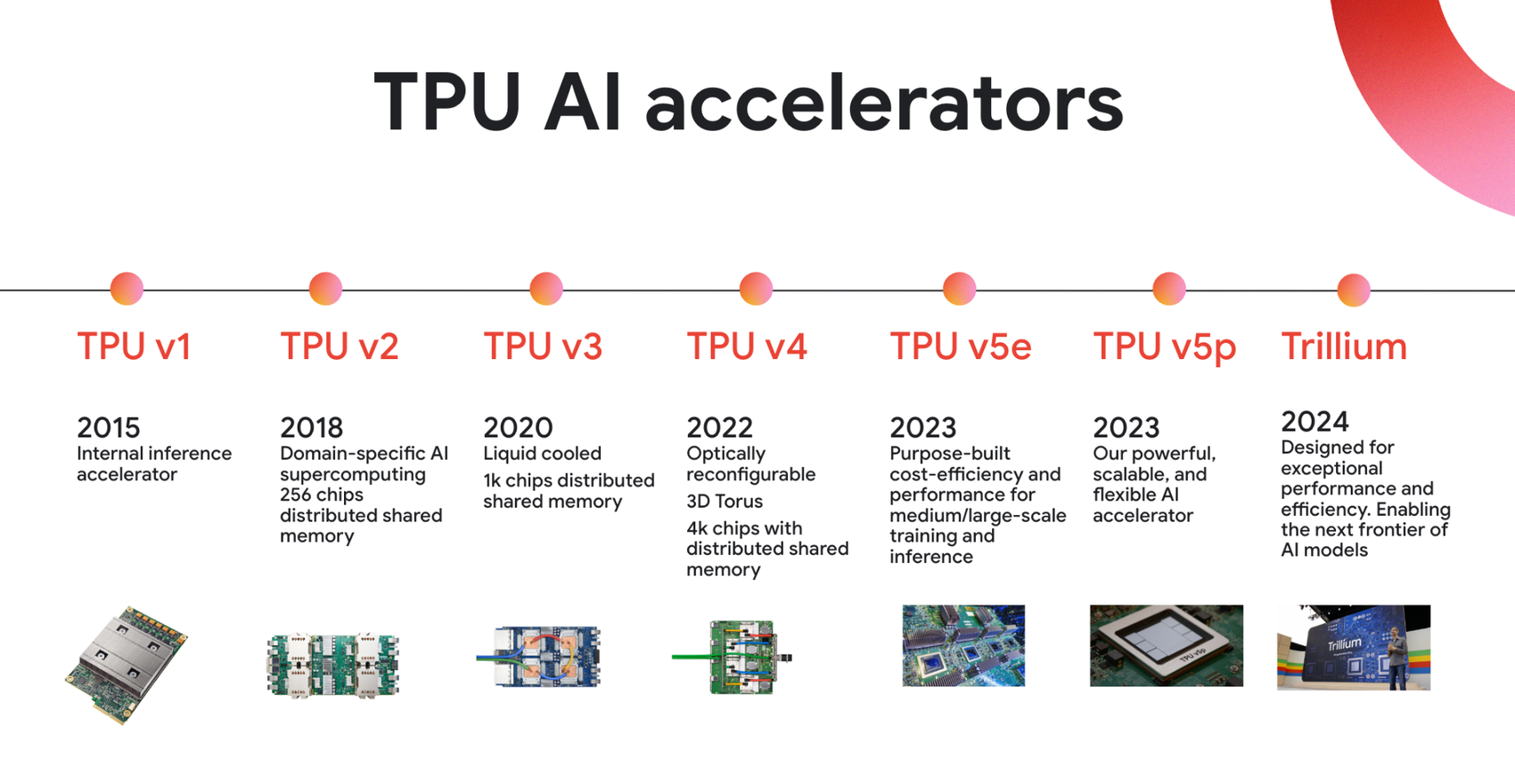

Google's Gemini 2.5 Pro, widely regarded as one of the top-performing AI models, was trained entirely on Google's in-house TPU infrastructure; Google's technical report for the Gemini 2.5 family cites TPUv5p pods. By using its own custom silicon for both training and inference, Google positions its hardware as a formidable alternative to NVIDIA's long-standing dominance of the AI accelerator market. The move reflects a growing trend among tech giants to build specialized hardware tailored to their own AI workloads.

Apple has also bypassed NVIDIA GPUs for its internal foundation model training. According to Apple's own technical disclosures, the company trained the models behind its Apple Intelligence features on thousands of Google TPU chips, including TPUv4 and TPUv5p pods. That one tech giant relies on a rival's hardware for critical AI infrastructure signals a notable shift in procurement strategies and hardware preferences.

Beyond Google's proprietary silicon, other vendors are also making significant strides. AMD's MI300X and the newer MI350X series, announced in June 2025, are designed to compete directly with NVIDIA's Blackwell architecture, offering large memory capacity and competitive performance on specific workloads. Intel's Gaudi 3 has also emerged as a contender, with Intel claiming faster training and inference than NVIDIA's H100. Together, these chips further widen the options available to AI developers and researchers.

This diversification of AI hardware reflects a broader industry push for greater efficiency, lower cost, and less reliance on a single vendor. As AI models grow in complexity and scale, demand for specialized, high-performance accelerators is driving innovation across multiple companies, challenging the established order and fostering a more competitive market.