Varied Interpretations of AGI Fuel Debate Among Researchers and Public

The concept of Artificial General Intelligence (AGI) is currently at the center of a significant definitional debate within the artificial intelligence community, leading to widespread confusion and frustration. A recent social media post by user Flowers ☾ highlighted this issue, stating, "talking about AGI is so tiring and pointless because in one discussion it means being able to be a customer support agent about as good as a human worker and in the next discussion AGI is defined as solving quantum physics." This sentiment underscores the lack of a unified understanding of what AGI truly entails.

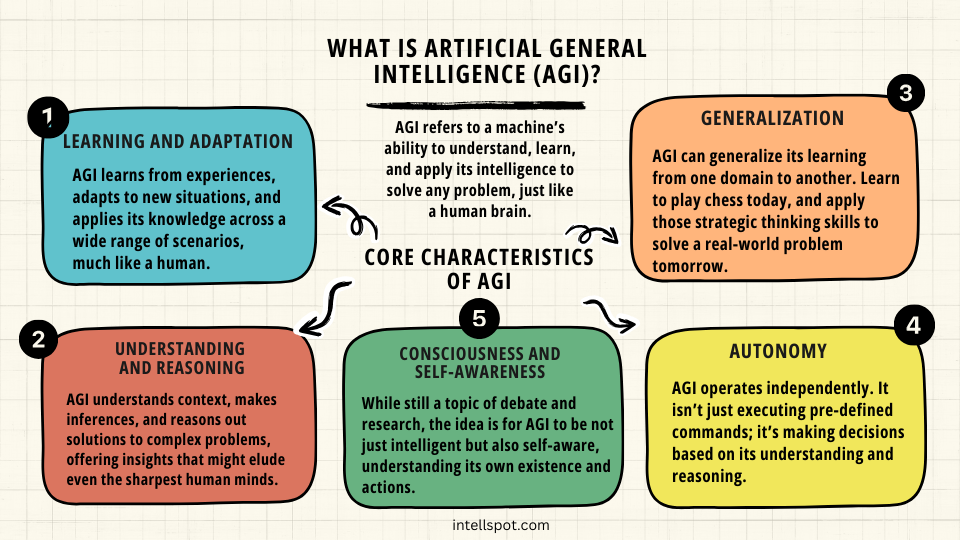

Experts and organizations frequently employ vastly different benchmarks for AGI. While some envision AGI as a system capable of performing any intellectual task a human can, others narrow the definition to systems that can handle most "economically valuable" cognitive tasks. This broad spectrum of interpretations means that claims of AGI's imminent arrival or even its current existence are met with skepticism, as the goalposts appear to constantly shift.

Historically, the term "AGI" was popularized in the early 2000s to recapture the ambitious goals of early AI pioneers, who envisioned machines with human-level general intelligence across all domains. However, as AI research progressed, the practical definition often narrowed. For instance, some early conceptions included physical tasks like making coffee, whereas later definitions focused predominantly on cognitive abilities, leading to further divergence in understanding.

The ambiguity surrounding AGI's definition has significant implications for research, policy-making, and public perception. Without a clear and agreed-upon framework, assessing progress, regulating development, or even communicating effectively about AI's capabilities becomes challenging. This ongoing conceptual divide continues to be a central point of discussion and contention among scientists, tech leaders, and the general public alike.